A Comparative Analysis of Heuristic and Usability Evaluation Methods


Elizabeth J. Simeral and Russell J. Branaghan

Usability testing and heuristic evaluation are two methods for detecting usability problems, or bugs, in software user interfaces. Usability testing identifies bugs that impair user performance. It provides a realistic context for the product evaluation, whereas heuristic evaluation does not. Further, it provides an estimate of bug severity, while heuristic evaluation does not. On the downside, usability testing is more expensive and time-intensive than heuristic evaluation. It also tends to overlook bugs that may not affect user performance but may negatively impact the user’s perception of product quality.

In a classical usability test, representative users conduct realistic tasks with an operational product or a partially operational prototype. At the same time, an experimenter keeps track of the participant’s success rate, errors, and task time. Usability bugs are discovered when some aspect of the system prevents the user from successfully completing his or her tasks in a timely manner. In practice, various usability specialists have customized their testing techniques to capture user satisfaction data in addition to user performance data. In the past ten years, variations of this method have grown in popularity; many major software companies now have their own usability labs and usability departments.

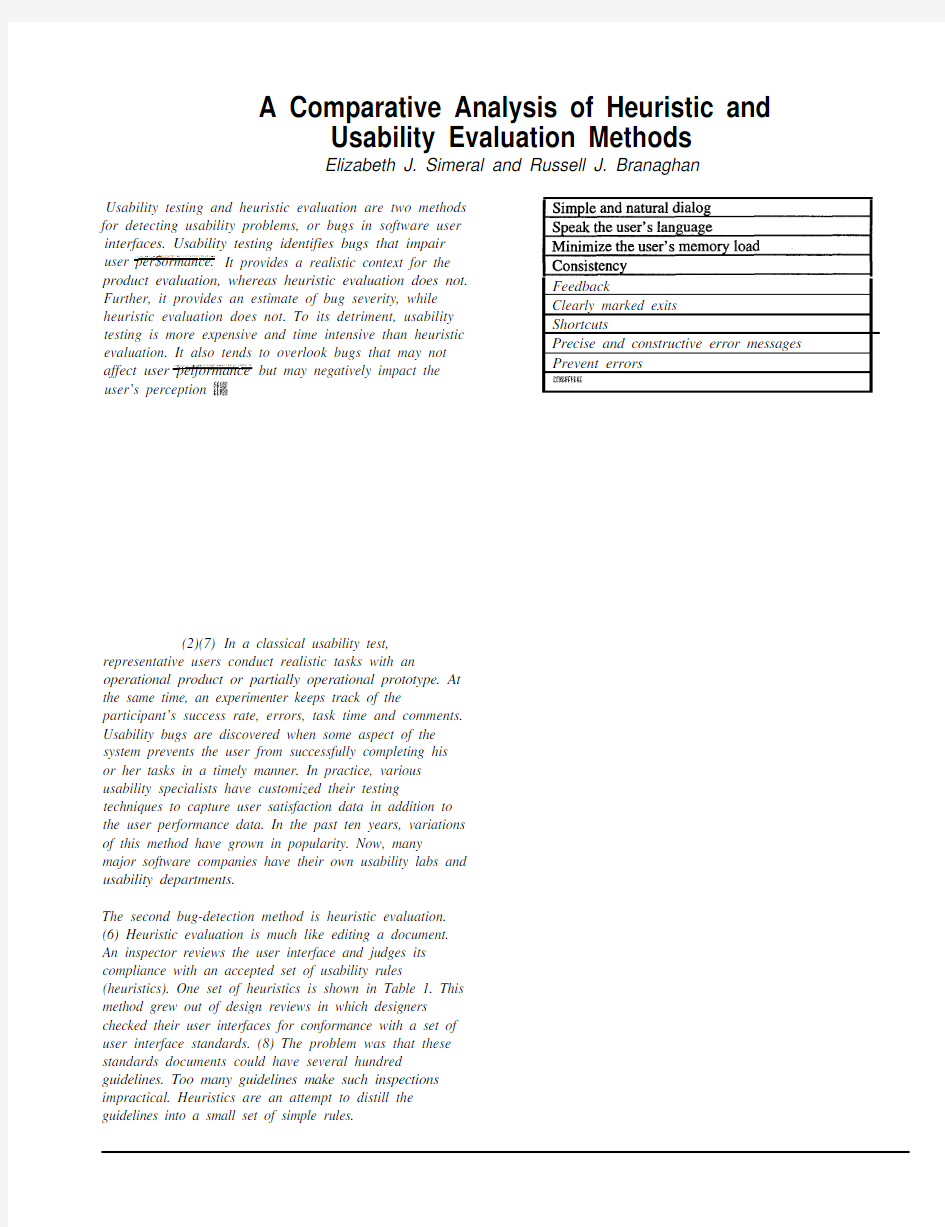

The second bug-detection method is heuristic evaluation (6). Heuristic evaluation is much like editing a document: an inspector reviews the user interface and judges its compliance with an accepted set of usability rules (heuristics). One set of heuristics is shown in Table 1. This method grew out of design reviews in which designers checked their user interfaces for conformance with a set of user interface standards (8). The problem was that these standards documents could contain several hundred guidelines, and that many guidelines made such inspections impractical. Heuristics are an attempt to distill the guidelines into a small set of simple rules.

Table 1

Feedback
Clearly marked exits
Shortcuts
Precise and constructive error messages
Prevent errors

HEURISTIC EVALUATION

The chief strengths of heuristic evaluation are speed and affordability (3). In our experience, an average heuristic evaluation takes about one and one-half hours. Because we usually use six evaluators, we can learn a great deal about the usability of a product in just one day.

One weakness of this method is that it may produce false positives, that is, minor bugs that do not negatively impact user performance or the user’s perception of product quality. The danger is that developers may spend valuable time fixing such bugs at the expense of those that do matter.

A second weakness is that heuristic evaluation does not approximate the conditions under which real users would use the system. Heuristic evaluation does not take place in the context of a real user task; therefore, its results may not be as valid as those derived from a usability test. Moreover, these results may be more difficult to defend than those uncovered in a usability test.

Third, heuristic evaluation provides little information about the magnitude of the problems it detects. It simply indicates that a particular aspect of the product may have a usability problem. In contrast, a usability test gives you a sense of each problem’s severity.

Finally, it is doubtful that the heuristics themselves help evaluators detect bugs. In our experience, evaluators rely on common sense and previous experience. In fact, in a recent evaluation, we found that the most common bug category detected did not fit any of the heuristics in the list. This agrees with other research, which found that evaluators did not believe the specific heuristics were helpful.

REFERENCES

(1) ... pp. 58-59, October 1991.

(2) ...

(3) Jeffries, R., Miller, J. R., Wharton, C., and Uyeda, K. M., “User Interface Evaluation in the Real World: A Comparison of Four Techniques,” Proceedings of the ACM CHI ’91 Conference, pp. 119-124, 1991.

(4) Karat, C., Campbell, R. L., ... “... interface evaluation,” Proceedings of the ACM CHI ’92 Conference, pp. 397-404, 1992.

(5) Molich, R., ... “... Computer Dialog,” Communications of the ACM ...

(6) ... Sons, 1994.

(7) ... Sons, 1994.

(8) Smith, S. L., ...

Elizabeth Simeral
Symix
2800 Corporate Exchange Drive
Columbus, OH 43231

Elizabeth Simeral has over ... years of experience in the software industry. Currently with Symix, she heads the product usability engineering program. Prior to joining Symix, Elizabeth was an independent contractor, a sales representative for CMHC Systems, and a product developer for Goal Systems. She has developed a variety of

Theory and Research