A Scatter Search Approach for the Minimum Sum-of-Squares Clustering Problem

Joaquín A. Pacheco

Department of Applied Economics, University of Burgos,

Plaza Infanta Elena s/n BURGOS 09001, SPAIN

Tel.: +34 947 259021; fax: +34 947 258956; email: jpacheco@ubu.es

Latest version: September 19, 2003

Abstract

A metaheuristic procedure based on the Scatter Search approach is proposed for the non-hierarchical clustering problem under the criterion of minimum Sum-of-Squares Clustering. This algorithm incorporates procedures based on different strategies, such as Local Search, GRASP, Tabu Search or Path Relinking. The aim is to obtain quality solutions with short computation times. A series of computational experiments has been performed. The proposed algorithm obtains better results than previously reported methods, especially with small numbers of clusters.

Keywords: Clustering, Metaheuristics, Scatter Search, Local Search, GRASP, Tabu Search, Path Relinking

1. Introduction

Consider a set $X = \{x_1, x_2, \ldots, x_N\}$ of $N$ points in $\mathbb{R}^q$ and let $m$ be a predetermined positive integer. The Minimum Sum-of-Squares Clustering (MSSC) problem is to find a partition of $X$ into $m$ disjoint subsets (clusters) so that the sum of squared distances from each point to the centroid of its cluster is minimum. Specifically, let $P_m$ denote the set of all partitions of $X$ into $m$ sets, where each partition $PA \in P_m$ is defined as $PA = \{C_1, C_2, \ldots, C_m\}$ and $C_i$ denotes each of the clusters that form $PA$. Thus, the problem can be expressed as:

$$\min_{PA \in P_m} \sum_{i=1}^{m} \sum_{x_l \in C_i} \| x_l - \bar{x}_i \|^2 ,$$

where the centroid $\bar{x}_i$ is defined as

$$\bar{x}_i = \frac{1}{n_i} \sum_{x_l \in C_i} x_l , \quad \text{with } n_i = |C_i| .$$

The problem can also be written as

$$\min \sum_{l=1}^{N} \| x_l - \bar{x}_{k_l} \|^2 ,$$

where $k_l$ is the cluster to which point $x_l$ belongs.
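As a concrete illustration of the objective, the following minimal Python sketch evaluates the MSSC value of a given partition. The function names (`centroid`, `sq_dist`, `mssc_objective`) and the encoding of a partition as a list of cluster labels are assumptions made for this example, not part of the original implementation.

```python
from typing import List, Sequence

Point = Sequence[float]

def centroid(points: List[Point]) -> List[float]:
    """Component-wise mean of a non-empty list of points."""
    n = len(points)
    q = len(points[0])
    return [sum(p[d] for p in points) / n for d in range(q)]

def sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two points."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def mssc_objective(X: List[Point], labels: List[int], m: int) -> float:
    """Sum of squared distances from each point to its cluster centroid.

    labels[l] is the (0-based) cluster index k_l of point X[l];
    this label-vector encoding is an assumption of the sketch.
    """
    clusters: List[List[Point]] = [[] for _ in range(m)]
    for x, k in zip(X, labels):
        clusters[k].append(x)
    total = 0.0
    for members in clusters:
        if not members:          # an empty cluster contributes nothing
            continue
        c = centroid(members)
        total += sum(sq_dist(x, c) for x in members)
    return total

# Four collinear points: the natural partition is far cheaper than a mixed one.
X = [(0.0, 0.0), (2.0, 0.0), (10.0, 0.0), (12.0, 0.0)]
good = mssc_objective(X, [0, 0, 1, 1], 2)
bad = mssc_objective(X, [0, 1, 0, 1], 2)
```

In this toy instance the partition that groups neighbouring points has a much smaller objective value than the one that mixes them, which is exactly what the minimization seeks.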

The design of clusters is a well-known exploratory Data Analysis issue, also referred to as Pattern Recognition. The aim is to find whether a given set of cases X has some structure and, if so, to display it in the form of a partition. This problem belongs to the area of Non-Hierarchical cluster design, which has many applications in economics and in the social and natural sciences. It is known to be NP-Hard [5].

Various exact methods for MSSC can be found in the literature (see, for example, [17] and [7]). Some of them, such as the method proposed by du Merle et al. [8], have succeeded in solving problems with up to 150 points. For larger problems the use of heuristic algorithms is still necessary. The most popular are those based on Local Search methods, such as the well-known K-Means [15] and H-Means [14] procedures. In a recent work, Hansen and Mladenovic [13] propose a new Local Search procedure, J-Means, along with the variants H-Means+ and HK-Means. In recent years, algorithms using metaheuristic strategies have been designed, such as Simulated Annealing [16], Tabu Search [2], Genetic Algorithms [3] and, most recently, Variable Neighborhood Search (VNS) [8], [13] and Memetic Algorithms [19].

An algorithm that is able to obtain good solutions in short computation times is proposed for this problem. The method is based on a recent metaheuristic strategy named Scatter Search (SS) and also incorporates procedures based on other methods, such as Local Search, Tabu Search, GRASP and Path Relinking. This Scatter Search approach is analyzed and compared with other recent techniques. In all cases, the proposed technique gives adequate solutions, compared with those techniques, in reasonable time, especially for small values of m.

2. Solution Approach

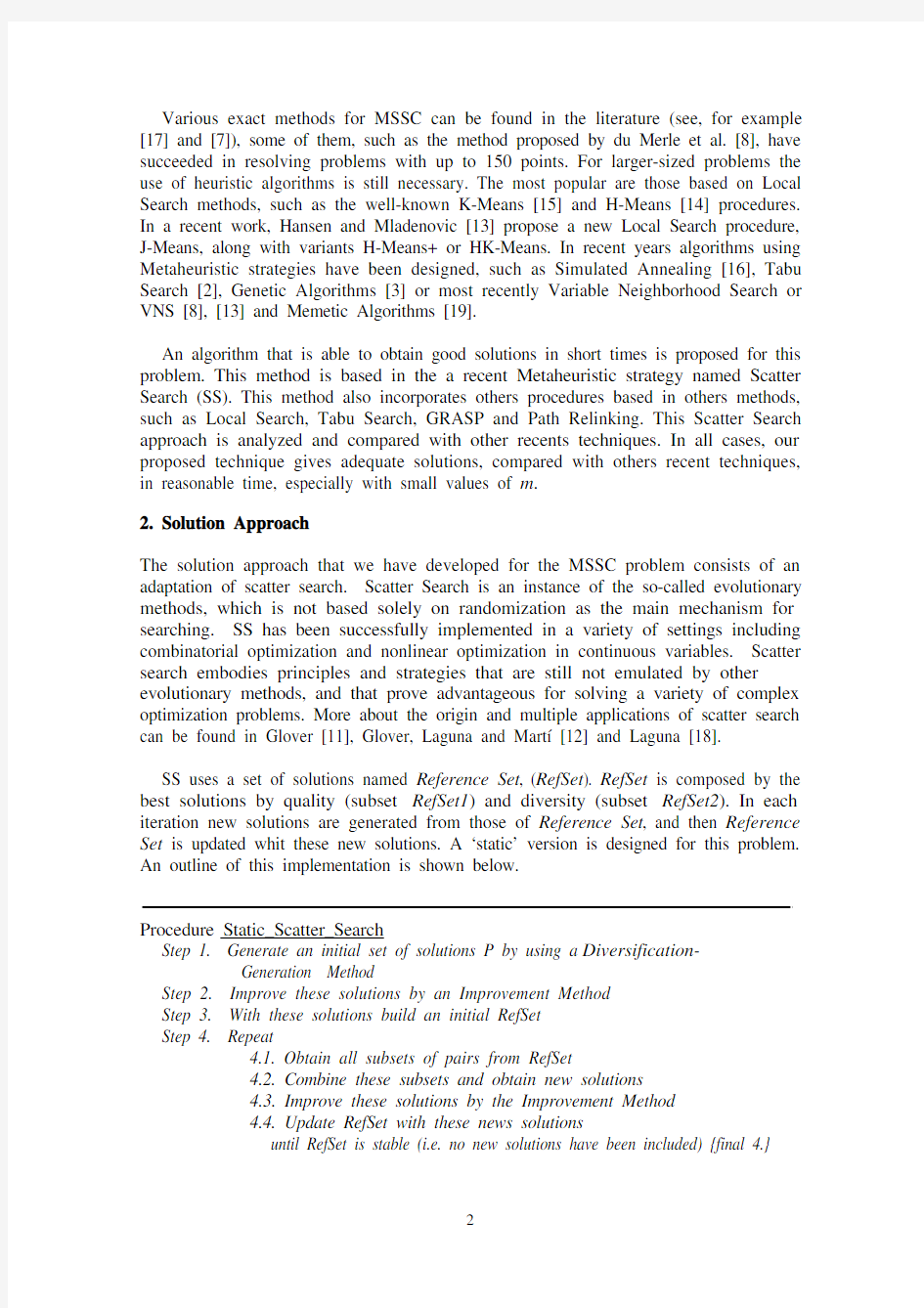

The solution approach that we have developed for the MSSC problem consists of an adaptation of scatter search. Scatter Search is an instance of the so-called evolutionary methods, which is not based solely on randomization as the main mechanism for searching. SS has been successfully implemented in a variety of settings including combinatorial optimization and nonlinear optimization in continuous variables. Scatter search embodies principles and strategies that are still not emulated by other evolutionary methods, and that prove advantageous for solving a variety of complex optimization problems. More about the origin and multiple applications of scatter search can be found in Glover [11], Glover, Laguna and Martí [12] and Laguna [18].

SS uses a set of solutions called the Reference Set (RefSet). RefSet is composed of the best solutions by quality (subset RefSet1) and by diversity (subset RefSet2). In each iteration, new solutions are generated from those in the Reference Set, and the Reference Set is then updated with these new solutions. A ‘static’ version is designed for this problem. An outline of this implementation is shown below.

Procedure Static_Scatter_Search

Step 1. Generate an initial set of solutions P by using a Diversification- Generation Method

Step 2. Improve these solutions by an Improvement Method

Step 3. With these solutions build an initial RefSet

Step 4. Repeat

4.1. Obtain all subsets of pairs from RefSet

4.2. Combine these subsets and obtain new solutions

4.3. Improve these solutions by the Improvement Method

4.4. Update RefSet with these new solutions

until RefSet is stable (i.e., no new solutions have been included) {end of Step 4}

Step 5. If max_iter iterations (Steps 1-4) elapse without improvement, stop; otherwise return to Step 1

Figure 1. SS implementation

Let n_pob denote the size of P (Step 1), and let b1 and b2 denote the sizes of RefSet1 and RefSet2, respectively.

To build the initial RefSet (Step 3), the b1 best solutions (by quality) are first taken from P. The next b2 solutions are then added to RefSet one at a time according to diversity, using the following measure. Let λ be a solution in P \ RefSet; we define

$$\delta_{\min}(\lambda) = \min \{ \mathrm{dif}(\lambda, \lambda') : \lambda' \in RefSet \},$$

where dif(λ, λ’) is the number of assignments in λ that differ from those in λ’. We then select the candidate solution λ that maximizes δmin(λ).
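The construction of the initial RefSet can be sketched as follows. This is a minimal illustration, not the author's code: solutions are assumed to be encoded as lists of cluster labels, `dif` is taken to be a position-by-position comparison of those labels (so it assumes that cluster labels are comparable between solutions), and the names `build_refset`, `P` and `values` are hypothetical.

```python
from typing import List

def dif(a: List[int], b: List[int]) -> int:
    """Number of points whose cluster assignment differs between a and b."""
    return sum(1 for ka, kb in zip(a, b) if ka != kb)

def build_refset(P: List[List[int]], values: List[float],
                 b1: int, b2: int) -> List[int]:
    """Return indices (into P) of the initial RefSet.

    The b1 best solutions by objective value are taken first; then b2
    solutions are added one at a time, each maximizing delta_min, the
    minimum dif to the solutions already in RefSet. Assumes b1 >= 1.
    """
    order = sorted(range(len(P)), key=lambda i: values[i])  # best first
    refset = order[:b1]
    candidates = order[b1:]
    for _ in range(b2):
        if not candidates:
            break
        most_diverse = max(
            candidates,
            key=lambda i: min(dif(P[i], P[r]) for r in refset),
        )
        refset.append(most_diverse)
        candidates.remove(most_diverse)
    return refset

# Five solutions of a 4-point problem with their objective values.
P = [[0, 0, 1, 1], [0, 0, 1, 0], [1, 1, 0, 0], [0, 1, 1, 1], [0, 1, 0, 1]]
values = [4.0, 5.0, 9.0, 6.0, 8.0]
refset = build_refset(P, values, b1=2, b2=1)
```

Here the two best solutions (indices 0 and 1) enter by quality, and the third member is the remaining solution whose closest RefSet member is farthest away.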

The updating of RefSet is based only on quality. That is, only the new solutions that improve on the worst solution in RefSet are added (Step 4.4). We now describe the diversification, improvement and combination methods.

2.1 Diversification Method

Our diversification method is based on GRASP constructions. GRASP, or greedy randomized adaptive search procedure, is a heuristic that constructs solutions with controlled randomization and a greedy function. Most GRASP implementations also include a local search that is used to improve upon the solutions generated with the randomized greedy function. GRASP was originally proposed in the context of a set covering problem [9]. Details of the methodology and a survey of applications can be found in Feo and Resende [10] and Pitsoulis and Resende [20].

The method proposed in this paper consists of two stages. In the first, a subset S of points from X that are sufficiently far from each other (seed points) is built. This is denoted as $S = \{x_{s_1}, x_{s_2}, \ldots, x_{s_m}\}$. We also use m one-point clusters corresponding to the seed points, i.e., $C_i = \{x_{s_i}\}$, i = 1, …, m. In the second stage, the remaining points are assigned to the different clusters according to the distance to their centroids. The set S is determined as follows:

- Determine $x_{j^*}$, the farthest point from the centroid of X, and set $S = \{x_{j^*}\}$.

- While |S| < m do:

1. Calculate $\Delta_j = \min \{ \| x_j - x_l \| : x_l \in S \}$, $\forall x_j \notin S$.

2. Calculate $\Delta_{\max} = \max \{ \Delta_j : x_j \notin S \}$.

3. Build $L = \{ x_j : \Delta_j \ge \alpha \Delta_{\max} \}$.

4. Choose $x_{j^*} \in L$ randomly and set $S = S \cup \{x_{j^*}\}$.

The α parameter (0 ≤ α ≤ 1) controls the level of randomization for the greedy selections. Randomization decreases as the value of α increases. This controlled randomization results in a sampling procedure where the best solution found is typically better than the one found by setting α = 1. A judicious selection of the value of α provides a balance between diversification and solution quality.
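The first stage (seed selection with a restricted candidate list) can be sketched in Python as follows. This is only an illustration under stated assumptions: points are tuples of floats, seeds are returned as point indices, and the function name `select_seeds` and the explicit random generator argument `rng` are choices made for this example.

```python
import math
import random
from typing import List, Sequence

def select_seeds(X: List[Sequence[float]], m: int, alpha: float,
                 rng: random.Random) -> List[int]:
    """GRASP-style selection of m seed points (returned as indices into X)."""
    n, q = len(X), len(X[0])
    center = [sum(x[d] for x in X) / n for d in range(q)]
    # Start from the point farthest from the centroid of X.
    first = max(range(n), key=lambda j: math.dist(X[j], center))
    S = [first]
    while len(S) < m:
        # Distance from each non-seed point to its nearest seed.
        delta = {j: min(math.dist(X[j], X[s]) for s in S)
                 for j in range(n) if j not in S}
        delta_max = max(delta.values())
        # Restricted candidate list: points that are "far enough" from S.
        L = [j for j, d in delta.items() if d >= alpha * delta_max]
        S.append(rng.choice(L))
    return S

X = [(0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (11.0, 0.0), (5.0, 8.0)]
greedy_seeds = select_seeds(X, 3, alpha=1.0, rng=random.Random(0))
random_seeds = select_seeds(X, 3, alpha=0.0, rng=random.Random(1))
```

With α = 1 the candidate list contains only the farthest point(s), so the construction is purely greedy; with α = 0 every non-seed point is a candidate and the choice after the first seed is purely random.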

The first time that the diversification method is employed (Step 1 in Figure 1), there is no history associated with the number of times each point has been selected as a seed point. However, this information is valuable when the method is applied again to rebuild the reference set. The information is stored in the following array:

freq(j) = number of times that point x_j has been selected to belong to S in previous executions of Step 1 of Figure 1.

The information accumulated in freq(j) is used to modify the $\Delta_j$ values in the first phase of the diversification method. The modified evaluation is:

$$\Delta'_j = \Delta_j - \beta \, \Delta_{\max} \, \frac{freq(j)}{freq_{\max}} ,$$

where $freq_{\max} = \max \{ freq(j) : \forall j \}$. The modified $\Delta'_j$ values are used to calculate $\Delta'_{\max}$ and execute the diversification method. Large values of β encourage the selection of seed points that have not been selected frequently. The use of frequency information within a diversification method is inspired by Campos et al. [6].
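A small sketch of this frequency-based penalty is given below. It assumes that the modified evaluation subtracts from each distance a penalty proportional to β, to the largest distance and to the relative selection frequency of the point, which is our reading of the formula in the source; the function name `penalized_deltas` and the data layout (a dictionary of distances for candidate points, a list of frequencies for all points) are hypothetical.

```python
from typing import Dict, List

def penalized_deltas(delta: Dict[int, float], freq: List[int],
                     beta: float) -> Dict[int, float]:
    """Penalize points that were often chosen as seeds in earlier constructions.

    delta maps candidate point index -> distance to the nearest seed;
    freq[j] counts how often point j has been a seed so far.
    """
    freq_max = max(freq)
    if freq_max == 0:            # first construction: no history, no penalty
        return dict(delta)
    delta_max = max(delta.values())
    return {j: d - beta * delta_max * freq[j] / freq_max
            for j, d in delta.items()}

modified = penalized_deltas({0: 10.0, 1: 8.0, 2: 4.0}, freq=[4, 0, 2], beta=0.5)
```

In this example point 0 is the farthest candidate but has been used most often, so after the penalty point 1, which has never been a seed, becomes the most attractive choice.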

In phase 2 of our diversification method, the points of X \ S are assigned as follows:

1. Set A = X \ S , (A = Set of unassigned points)

2. For each point $x_j \in A$ and each cluster $C_i$, i = 1, …, m, calculate the increase in the objective function, $\Gamma_{ij}$, that results from assigning point $x_j$ to cluster $C_i$:

$$\Gamma_{ij} = \frac{n_i}{n_i + 1} \, \| x_j - \bar{x}_i \|^2 ,$$

where $\bar{x}_i$ is the centroid of $C_i$ and $n_i = |C_i|$.

3. Calculate $\Gamma_{i^*j^*} = \min \{ \Gamma_{ij} : x_j \in A,\ i = 1, \ldots, m \}$.

4. Assign $x_{j^*}$ to $C_{i^*}$ and set $A = A \setminus \{ x_{j^*} \}$.

5. If $A \neq \emptyset$, return to step 2; otherwise stop.
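The second phase can be sketched as a greedy loop that repeatedly makes the cheapest point-to-cluster assignment. The sketch below is illustrative only: it assumes seeds are given as point indices, keeps running coordinate sums to obtain centroids, and uses the hypothetical name `assign_remaining`; it re-evaluates all pairs at every step for clarity rather than speed.

```python
from typing import List, Sequence

def assign_remaining(X: List[Sequence[float]], seeds: List[int]) -> List[int]:
    """Assign every non-seed point greedily; returns a cluster label per point."""
    m, q = len(seeds), len(X[0])
    labels = {s: i for i, s in enumerate(seeds)}   # seed s founds cluster i
    sums = [list(X[s]) for s in seeds]             # coordinate sums per cluster
    counts = [1] * m                               # n_i, one point per cluster
    A = set(range(len(X))) - set(seeds)            # unassigned points
    while A:
        best = None
        for j in sorted(A):
            for i in range(m):
                n_i = counts[i]
                d2 = sum((X[j][d] - sums[i][d] / n_i) ** 2 for d in range(q))
                gamma = n_i / (n_i + 1) * d2       # increase in the objective
                if best is None or gamma < best[0]:
                    best = (gamma, j, i)
        _, j, i = best
        labels[j] = i
        counts[i] += 1
        for d in range(q):
            sums[i][d] += X[j][d]
        A.remove(j)
    return [labels[j] for j in range(len(X))]

X = [(0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (11.0, 0.0)]
labels = assign_remaining(X, seeds=[0, 3])
```

Because the centroid and size of the receiving cluster change after each assignment, the increases are recomputed before the next point is chosen.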

2.3 Improvement Method

As an improvement method, the H-Means+ algorithm [13] is used together with a simple Tabu Search procedure. The H-Means+ algorithm is a variant of the well-known H-Means algorithm [14].

The Tabu Search procedure was proposed by Pacheco and Valencia [19] and is briefly described below. It uses the neighborhood moves employed in K-Means: at each step, one point is moved from its cluster to a different one. To avoid cycling, when point x_j is moved from cluster C_l to cluster C_i, it is prevented from returning to C_l for a certain number of iterations. Specifically, define

Matrix_tabu(l, j) = the number of the iteration in which point x_j leaves cluster C_l.

The Tabu Search method is described below, where P denotes an initial solution with value f. The parameter τ indicates the number of iterations during which a point is not allowed to return to the cluster it has left. The parameter κ indicates the maximum number of iterations without improvement; in our case, we use κ = 100. After different tests, τ was set to m.

Tabu Search

1. Set Matrix_tabu(i, j) = –τ, i = 1, ..., m, j = 1, …, N

2. Set δ = 0, P* = P, f* = f and η = 0

3. Repeat

(3.1) δ = δ + 1

(3.2) Determine v_i*j* = min { v_ij : i = 1, ..., m; j = 1, ..., N; x_j ∉ C_i, verifying

δ > Matrix_tabu(i, j) + τ or

f + v_ij < f* (‘aspiration criterion’) }

(3.3) Reassign x_j* to C_i*

(3.4) Set Matrix_tabu(l*, j*) = δ (l* being the previous cluster of x_j*)

(3.5) If f (the value of the current solution P) < f*, then set P* = P, f* = f and η = δ

until (δ – η > κ) or another termination criterion is met
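The procedure above can be sketched in Python as follows. This is a simplified illustration rather than the implementation used in the experiments: the move value is computed with the standard closed-form update for transferring one point between clusters, moves that would empty a cluster are skipped (an assumption of this sketch), and the names `tabu_search` and `max_iter` are hypothetical.

```python
from typing import List, Sequence, Tuple

def tabu_search(X: List[Sequence[float]], labels: List[int], m: int,
                tau: int, kappa: int,
                max_iter: int = 10_000) -> Tuple[List[int], float]:
    """Single-point reassignment tabu search for MSSC (illustrative sketch)."""
    N, q = len(X), len(X[0])
    labels = list(labels)
    counts = [0] * m
    sums = [[0.0] * q for _ in range(m)]
    for x, k in zip(X, labels):
        counts[k] += 1
        for d in range(q):
            sums[k][d] += x[d]

    def d2(j: int, k: int) -> float:
        """Squared distance from point j to the centroid of cluster k."""
        return sum((X[j][d] - sums[k][d] / counts[k]) ** 2 for d in range(q))

    f = sum(d2(j, labels[j]) for j in range(N))
    best_labels, f_best = list(labels), f
    tabu = [[-tau] * N for _ in range(m)]   # tabu[l][j]: iteration j left l
    it = last_improve = 0
    while it - last_improve <= kappa and it < max_iter:
        it += 1
        move = None
        for j in range(N):
            l = labels[j]
            if counts[l] == 1:              # assumption: never empty a cluster
                continue
            leave = counts[l] / (counts[l] - 1) * d2(j, l)
            for i in range(m):
                if i == l:
                    continue
                enter = 0.0 if counts[i] == 0 else \
                    counts[i] / (counts[i] + 1) * d2(j, i)
                v = enter - leave           # change in the objective
                allowed = it > tabu[i][j] + tau or f + v < f_best  # aspiration
                if allowed and (move is None or v < move[0]):
                    move = (v, j, i)
        if move is None:                    # every move is tabu
            break
        v, j, i = move
        l = labels[j]
        for d in range(q):
            sums[l][d] -= X[j][d]
            sums[i][d] += X[j][d]
        counts[l] -= 1
        counts[i] += 1
        labels[j] = i
        tabu[l][j] = it
        f += v
        if f < f_best - 1e-12:              # tolerance guards float drift
            best_labels, f_best, last_improve = list(labels), f, it
    return best_labels, f_best

X = [(0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (11.0, 0.0)]
best, f_best = tabu_search(X, [0, 1, 0, 1], m=2, tau=2, kappa=5)
```

Note that the best admissible move is executed even when it worsens the current solution; this is what allows the search to leave local optima, while the tabu matrix prevents it from immediately undoing recent moves.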

In step 3.2, $v_{ij}$ is the change in the value of the objective function when $x_j$ is reassigned to $C_i$. The following formula, obtained from Späth [22], simplifies the calculations in K-Means. Let $C_l$ be the cluster to which $x_j$ belongs; then the value of $v_{ij}$ is calculated as follows:

$$v_{ij} = \frac{n_i}{n_i + 1} \, \| \bar{x}_i - x_j \|^2 - \frac{n_l}{n_l - 1} \, \| \bar{x}_l - x_j \|^2 .$$

2.4 Combination Method

New solutions are generated by combining pairs of reference set solutions. The number of solutions generated from each combination depends on the relative quality of the solutions being combined. Let λi and λj be the reference solutions being combined, where i < j. Assume that the reference set is ordered so that λ1 is the best solution and λb is the worst (b = b1 + b2). Then the number of solutions generated from each combination is 3 if i ≤ b1 and j ≤ b1; 2 if i ≤ b1 and j > b1; and 1 if i > b1 and j > b1.
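The rule can be written as a small function; the name `n_new_solutions` is hypothetical and the ranks are 1-based, as in the text.

```python
def n_new_solutions(i: int, j: int, b1: int) -> int:
    """Number of solutions generated when combining RefSet members i < j.

    i and j are 1-based ranks in the quality-ordered reference set;
    the first b1 ranks form the high-quality subset RefSet1.
    """
    if not 1 <= i < j:
        raise ValueError("expected 1 <= i < j")
    if j <= b1:      # both solutions in RefSet1
        return 3
    if i <= b1:      # one in RefSet1, one in RefSet2
        return 2
    return 1         # both in RefSet2

# Total number of new solutions per pass for b1 = b2 = 5 (b = 10).
b1, b = 5, 10
total = sum(n_new_solutions(i, j, b1)
            for i in range(1, b + 1) for j in range(i + 1, b + 1))
```

With b1 = b2 = 5 there are 10 pairs inside RefSet1, 25 mixed pairs and 10 pairs inside RefSet2, so one pass over all pairs generates 90 trial solutions.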

Each two-element subset of RefSet is used to generate new solutions. To do so, we use a strategy called Path Relinking. The basic idea is as follows: a path joining the two initial solutions is built, and a number of intermediate points (solutions) on the ‘path’ or ‘chain’ are selected as new solutions. The aim is for the intermediate solutions to be as equidistant as possible from each other. The improvement method described above is then applied to these intermediate solutions. Figure 2 depicts this idea.

Figure 2. Generation of new solutions by using Path Relinking (a path from λi to λj with intermediate solutions λ* and λ**)

From every pair of solutions in the reference set (λi and λj in the figure), a path joining them is built. Solutions at preselected intermediate positions along the path are selected and improved. In this way new solutions are generated (λ* and λ** in the figure).

To build the path that joins λi and λj, at each step a point that is assigned to different clusters in the current solution and in λj is selected and shifted to its cluster in λj. At each step the best possible shift is selected. In this way, the intermediate solution at each step has one more element in common with λj.
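A minimal sketch of this path construction is shown below. It assumes that solutions are label vectors whose cluster labels are directly comparable, recomputes the objective from scratch for every candidate shift (simple but slow), and picks intermediate solutions at roughly equally spaced positions; the names `objective` and `path_relinking` and the spacing rule are assumptions of this example. The improvement step is omitted.

```python
from typing import List, Sequence

def objective(X: List[Sequence[float]], labels: List[int], m: int) -> float:
    """MSSC value of a label vector (empty clusters contribute nothing)."""
    q = len(X[0])
    total = 0.0
    for k in range(m):
        members = [x for x, lab in zip(X, labels) if lab == k]
        if not members:
            continue
        c = [sum(p[d] for p in members) / len(members) for d in range(q)]
        total += sum((p[d] - c[d]) ** 2 for p in members for d in range(q))
    return total

def path_relinking(X: List[Sequence[float]], start: List[int],
                   guide: List[int], m: int, n_out: int) -> List[List[int]]:
    """Walk from start towards guide; return up to n_out intermediate solutions."""
    current = list(start)
    diff = [j for j in range(len(X)) if current[j] != guide[j]]
    D = len(diff)                      # path length from start to guide
    path: List[List[int]] = []
    while len(diff) > 1:               # stop one step before reaching guide
        def value_after(j: int) -> float:
            trial = list(current)
            trial[j] = guide[j]
            return objective(X, trial, m)
        j_best = min(diff, key=value_after)   # best possible shift
        current[j_best] = guide[j_best]
        diff.remove(j_best)
        path.append(list(current))
    if not path or n_out <= 0:
        return []
    k = min(n_out, len(path))
    # Roughly equidistant positions 1..D-1 along the path.
    positions = sorted({max(1, (D * (t + 1)) // (k + 1)) for t in range(k)})
    return [path[p - 1] for p in positions]

X = [(0.0,), (1.0,), (2.0,), (10.0,), (11.0,), (12.0,)]
start = [0, 1, 1, 0, 0, 1]
guide = [0, 0, 0, 1, 1, 1]
new_solutions = path_relinking(X, start, guide, m=2, n_out=2)
```

Each step fixes one more assignment to its value in the guiding solution, so the solutions returned lie progressively closer to λj.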

Path Relinking is a strategy traditionally associated with the intensification phase of the Tabu Search. The underlying idea is that in the path between two good solutions there should be solutions of similar quality (in some cases, even better solutions). See Glover, Laguna and Martí [12] for more details.

3. Parameter Fine Tuning

One of the most time consuming tasks in the development of metaheuristic procedures for optimization is the tuning of search parameters. Adenso-Diaz and Laguna [1] propose an automated parameter tuning system called CALIBRA that employs statistical analysis techniques and a local search procedure to create a systematic way of fine-tuning algorithms. The goal of CALIBRA is to provide an automated system to fine-tune algorithms, where a user needs only to specify a training set of instances and a measure of performance.

CALIBRA works with a set of problem instances and a range of values for each search parameter. The procedure utilizes design of experiments and heuristics to search for the best parameter values. The quality of a set of values is tested on the specified set of problem instances. CALIBRA is available at opalo.etsiig.uniovi.es/~adenso/file_d.html, where a user’s manual can also be found.

Our complete set of test problems consists of 15 instances, as we point out in Section 4. We selected a relatively small training set of 3 problems, because we determined that this sample was representative and that the parameter values found with CALIBRA would also perform well when applied to the entire test set. The parameters to be adjusted were α and β in the (0.1, 0.9) range and b1 and b2 in the (3, 7) range. The values for MaxIter and PSize (max_iter and n_pob in the notation of Section 2) were set to 2 and 20, respectively. After running for approximately 5 hours on a Pentium III machine at 600 MHz, CALIBRA obtained the following values: α = 0.8, β = 0.5, b1 = 5 and b2 = 5.

Although a computational time of 5 hours may seem excessive, it actually represents a very reasonable effort when compared to manual parameter fine-tuning. We use these parameter values for all of the experiments reported in Section 4.

4. Computational Experiments

To compare the efficiency of our Scatter Search algorithm with that of other recent strategies, a series of computational experiments was performed. The following algorithms were tested:

- Memetic-HK: the Memetic Algorithm proposed in Pacheco and Valencia [19];

- HybMem: the Hybrid Algorithm proposed in Pacheco and Valencia [19];

- VNS-HK: the VNS algorithm proposed in Hansen and Mladenovic [13], with HK-Means as the Local Search method;

- VNS-J: the VNS algorithm with J-Means as the Local Search method;

- SS: the Scatter Search algorithm proposed in Section 2.

For this work we have used our own implementation of every algorithm. The HybMem algorithm uses an initial population generated by the greedy-random method described in Beltrán and Pacheco [4]. The VNS-HK and VNS-J algorithms use the best of these solutions as the initial solution.

The problem instances used in testing are the sets of (i) 575, (ii) 1060 and (iii) 3038 points in the plane taken from the TSPLIB [21] database.

All the tests in the current work are performed on a personal computer with a Pentium III 600 MHz processor. All the algorithms have been implemented in Pascal using Borland Delphi 5.0.

In (i), the running time is limited to 300 seconds for all the algorithms. We set m = 5, 10, 15, 20, 30, 40, …, 100. Table 1 presents the solutions obtained by each algorithm.

Table 1. Results for the 575-point TSPLIB instance

| m | Memetic | HybMem | VNS-HK | VNS-J | SS |
|---|---|---|---|---|---|
| 5 | 2497887.4 | 2497887.4 | 2497887.4 | 2497887.4 | 2497887.4 |
| 10 | 1110033.79 | 1110033.79 | 1110033.79 | 1110033.79 | 1110033.79 |
| 15 | 728530.353 | 728530.353 | 728530.353 | 728530.353 | 728530.353 |
| 20 | 531939.613 | 531913.582 | 531913.582 | 532170.717 | 531913.582 |
| 30 | 348880.815 | 349160.661 | 348823.56 | 351480.12 | 348563.429 |
| 40 | 256905.514 | 256005.663 | 255201.708 | 255576.465 | 254968.531 |
| 50 | 198428.047 | 196869.891 | 197830.014 | 198256.332 | 196087.801 |
| 60 | 158411.762 | 157935.431 | 156756.025 | 157871.603 | 156611.979 |
| 70 | 132176.153 | 130547.63 | 129276.624 | 131955.541 | 128863.021 |
| 80 | 111539.837 | 111539.837 | 109963.018 | 111344.912 | 110066.16 |
| 90 | 97267.418 | 96557.813 | 94828.38 | 96896.906 | 94858.468 |
| 100 | 85107.017 | 84710.192 | 82735.249 | 84502.781 | 82551.242 |

In (ii), we set m = 10, 20, 30, ..., 150. These test data were previously used in Hansen and Mladenovic [13], where the best known solution for every value of m (except m = 40) is reported. Those solutions were obtained on a SUN Ultra I workstation with 10 minutes of computation time. Here the running time is limited to 600 seconds for all the algorithms. Table 2 presents the solutions obtained by each algorithm and the percent deviation with respect to the best-known solution (dvt).
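For reference, the percent deviation can be computed as in the short sketch below. It assumes that dvt is the relative excess of a solution value over the previously best-known value, expressed as a percentage; the function name `percent_deviation` is hypothetical. The example uses the m = 10 values reported for the 1060-point instance, where the solutions with zero deviation have value 1754840214.

```python
def percent_deviation(value: float, best_known: float) -> float:
    """Relative deviation of a solution value from the best-known value, in %."""
    return 100.0 * (value - best_known) / best_known

# m = 10, 1060 points: a solution of value 1758089497 against 1754840214.
dvt = round(percent_deviation(1758089497, 1754840214), 3)
```

A negative deviation means that the new solution improves on the previously best-known one.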

Table 2. Results and percent deviation (dvt) with respect to the previous best-known solution for the 1060-point TSPLIB instance

| m | | Memetic | HybMem | VNS-HK | VNS-J | SS |
|---|---|---|---|---|---|---|
| 10 | value | 1754840214 | 1754840214 | 1758089497 | 1758089497 | 1754840214 |
| | dvt | 0 | 0 | 0.185 | 0.185 | 0 |
| 20 | value | 791794596 | 791794596 | 791794596 | 791794596 | 791794596 |
| | dvt | 0 | 0 | 0 | 0 | 0 |
| 30 | value | 482123632 | 481369435 | 489705165 | 489705165 | 481251643 |
| | dvt | 0.181 | 0.025 | 1.757 | 1.757 | 0 |
| 40 | value | 347420992 | 343999852 | 341675604 | 352788332 | 341342886 |
| | dvt | - | - | - | - | - |
| 50 | value | 260361695 | 259291682 | 257371638 | 261266903 | 256426137 |
| | dvt | 1.899 | 1.48 | 0.729 | 2.254 | 0.359 |
| 60 | value | 202854402 | 202211137 | 201649998 | 202070992 | 197376472 |
| | dvt | 2.829 | 2.503 | 2.219 | 2.432 | 0.052 |
| 70 | value | 163880189 | 161259691 | 159593209 | 160880193 | 158450591 |
| | dvt | 3.427 | 1.773 | 0.721 | 1.534 | 0 |
| 80 | value | 134082965 | 133270032 | 130401981 | 134420821 | 129315712 |
| | dvt | 4.029 | 3.398 | 1.173 | 4.291 | 0.330 |
| 90 | value | 114081006 | 114035202 | 110417656 | 113014913 | 110705227 |
| | dvt | 3.318 | 3.277 | 0 | 2.353 | 0.261 |
| 100 | value | 99766477.8 | 99581387.8 | 96671404.5 | 98925896.7 | 96860491.7 |
| | dvt | 3.516 | 3.324 | 0.304 | 2.644 | 0.500 |
| 110 | value | 88519719.8 | 87146237.2 | 85026192 | 88392643.7 | 85304193.3 |
| | dvt | 4.33 | 2.711 | 0.213 | 4.18 | 0.540 |
| 120 | value | 78641016.6 | 78641016.6 | 76149413 | 77889227.4 | 76554412.7 |
| | dvt | 4.023 | 4.023 | 0.727 | 3.028 | 1.263 |
| 130 | value | 70514877 | 70514877 | 68576048.7 | 70217658.1 | 68466017.6 |
| | dvt | 4.383 | 4.383 | 1.513 | 3.943 | 1.350 |
| 140 | value | 64483367.1 | 64230385.5 | 62148721.9 | 62668365.5 | 61623521.4 |
| | dvt | 5.5 | 5.086 | 1.68 | 2.531 | 0.821 |
| 150 | value | 58823525.5 | 58344170.2 | 56130419.6 | 57963193.9 | 56211593.8 |
| | dvt | 5.182 | 4.325 | 0.366 | 3.643 | 0.511 |

In (iii), we set m = 10, 20, …, 50, 100, 150, ..., 500. These test data were previously used in Hansen and Mladenovic [13], where the best known solution for every value of m is reported. Those solutions were obtained on a SUN Ultra I workstation with 50 minutes of computation time. Here the running time is limited to 600 seconds for all the algorithms. Table 3 presents the solutions obtained by each algorithm and the percent deviation with respect to the best-known solution (dvt).

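Table 3. Results and percent deviation (dvt) with respect to the previous best-known solution for the 3038-point TSPLIB instance

| m | | Memetic | HybMem | VNS-HK | VNS-J | SS |
|---|---|---|---|---|---|---|
| 10 | value | 560251191 | 560251191 | 560251191 | 560251191 | 560251191 |
| | dvt | 0 | 0 | 0 | 0 | 0 |
| 20 | value | 266832945 | 266812482 | 266812482 | 266855872 | 266812482 |
| | dvt | 0.008 | 0 | 0 | 0.016 | 0 |
| 30 | value | 175789668 | 176114040 | 176843237 | 176960722 | 175598606 |
| | dvt | 0.109 | 0.294 | 0.709 | 0.776 | 0 |
| 40 | value | 126321403 | 126106594 | 126542821 | 126689259 | 124961056 |
| | dvt | 0.17 | 0 | 0.346 | 0.462 | -0.909 |
| 50 | value | 98943049.5 | 98862239 | 98876217.7 | 98931614 | 98340138.6 |
| | dvt | -0.001 | -0.083 | -0.068 | -0.012 | -0.61 |
| 100 | value | 48962285.2 | 48725963 | 48166635.6 | 48943300.8 | 47899112.7 |
| | dvt | 2.604 | 2.109 | 0.937 | 2.564 | 0.376 |
| 150 | value | 31371538.9 | 31371538.9 | 30685399.7 | 31333000.8 | 30709692.4 |
| | dvt | 2.665 | 2.665 | 0.419 | 2.539 | 0.499 |
| 200 | value | 22690957.9 | 22690957.9 | 22005595.9 | 22646784.8 | 22311420.1 |
| | dvt | 3.524 | 3.524 | 0.397 | 3.322 | 1.792 |
| 250 | value | 17288153.9 | 17288153.9 | 16852031.4 | 17234369.5 | 17114643.3 |
| | dvt | 3.769 | 3.769 | 1.151 | 3.446 | 2.727 |
| 300 | value | 13820368.7 | 13820368.7 | 13461917.4 | 13747375.3 | 13657457.4 |
| | dvt | 3.492 | 3.492 | 0.808 | 2.946 | 2.272 |
| 350 | value | 11570674.9 | 11557842.1 | 11251477.7 | 11536793.3 | 11407670.3 |
| | dvt | 4.26 | 4.144 | 1.384 | 3.955 | 2.791 |
| 400 | value | 9787059.34 | 9787059.34 | 9577893.1 | 9765280.47 | 9710487.94 |
| | dvt | 3.988 | 3.988 | 1.766 | 3.757 | 3.175 |
| 450 | value | 8491442.19 | 8491442.19 | 8355290.55 | 8488212.65 | 8373889.37 |
| | dvt | 3.222 | 3.222 | 1.567 | 3.182 | 1.793 |
| 500 | value | 7408097.6 | 7408097.6 | 7311187.82 | 7377619.97 | 7338106.5 |
| | dvt | 2.392 | 2.392 | 1.052 | 1.97 | 1.424 |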

Tables 1-3 yield the following observations:

- The HybMem and Memetic-HK algorithms yield good solutions when the number of clusters, m, is low. However, the quality of their solutions deteriorates as m increases.

- The VNS-J and especially the VNS-HK algorithms become more ‘competitive’ than the other algorithms as m increases. For high values of m (m ≥ 50 in (i), m ≥ 90 in (ii), m ≥ 200 in (iii)), the VNS-HK algorithm yields the best solution of all the strategies tested in 14 cases.

- In 26 out of 41 cases, the SS algorithm yields the best solution. Furthermore, it is especially effective for lower values of m (m < 50 in (i), m < 90 in (ii), m < 200 in (iii)). In such cases, it always yields the best solution (except for m = 150 in (iii)).

- In addition, in (ii) SS equals the earlier best-known solution for m = 10, 20, 30 and 70 (for m = 40 no solution was known). For m = 50, 60 and 80 the deviations from the earlier best-known solution are very small. In (iii) SS equals the earlier best-known solution for m = 10, 20 and 30, and improves on it for m = 40 and 50.

- For higher values of m, SS still proves to be very competitive: in six out of twenty cases it yields the best solution (for example, m = 130 and 140 in (ii)). In the other fourteen instances it holds second place, after the VNS-HK algorithm. Deviations from the earlier best solutions are still low.

- Overall, the VNS-HK and SS algorithms are the best strategies in these tests. SS seems to be better, as it yields the best solution in most cases (26 compared to 22).

- Another interesting aspect is the robustness that SS shows in comparison with the other strategies. In (ii), the solution yielded by SS is never further than 1.350% from the best-known solution, whereas VNS-HK goes up to 2.219%. The other strategies show greater deviations: up to 5.5% for Memetic-HK, 5.086% for HybMem and 4.291% for VNS-J.

Figure 3 shows the evolution of the solution value found by the different algorithms as a function of computational time for N = 1060 and m = 80.

Figure 3. Evolution of the solution value found by the different strategies according to the computational time (horizontal axis: computational time in seconds; vertical axis: solution value)

As shown in Figure 3, VNS-HK, and especially SS, have a very greedy character. After a few seconds, they yield solutions that are better than those produced by the other strategies after using up the maximum computational time (600 seconds).

5. Conclusions

The contribution of our work is the development of a specialized and sophisticated scatter search procedure for the solution of the cluster design problem. This contribution is important, because our diversification, improvement and combination methods introduce novel features that can be adapted to other situations. Our method brings together several search strategies and mechanisms, such as GRASP constructions, local search, tabu search and Path Relinking within the framework of scatter search.

Using instances from the literature, we were able to show the merit of our SS design. In particular, our experiments show that our method obtains the best overall solutions compared with other recent methods. Our method is especially effective for small values of m. With higher values of m it is only surpassed by the VNS algorithm proposed by Hansen and Mladenovic [13], with HK-Means as the Local Search procedure. However, the following is worth noting: in their work, VNS is used with J-Means and J-Means+ as local search procedures, whereas in our work the use of J-Means in VNS leads to worse solutions than VNS with HK-Means and also to worse solutions than the method we propose.

Finally, our scatter search approach shows two important features: it is a ‘greedy’ method (it finds good solutions in very short computation times) and it is able to ‘evolve’ (to improve these solutions with more computation time).

References

[1] Adenso-Díaz B and Laguna M. Automated Fine Tuning of Algorithms with Taguchi Fractional Experimental Designs and Local Search. University of Colorado at Boulder; 2001.

[2] Al-Sultan KH. A Tabu Search Approach to the Clustering Problem. Pattern Recognition 1995; 28:1443-1451.

[3] Babu GP and Murty MN. A Near-Optimal Initial Seed Value Selection in K-means Algorithm using Genetic Algorithms. Pattern Recognition Letters 1993; 14:763-769.

[4] Beltrán M and Pacheco J. Nuevos métodos para el diseño de cluster no jerárquicos. Una aplicación a los municipios de Castilla y León. Estadística Española. Instituto Nacional de Estadística 2001; 43, 148:209-224. (Available at www.ine.es)

[5] Brucker P. On the Complexity of Clustering Problems. Lecture Notes in Economics and Mathematical Systems 1978; 157:45-54.

[6] Campos V, Glover F, Laguna M and Martí R. An Experimental Evaluation of a Scatter Search for the Linear Ordering Problem. Journal of Global Optimization 2001; 21:397-414.

[7] Diehr G. Evaluation of a Branch and Bound Algorithm for Clustering. SIAM J. Sci. Stat. Comput. 1985; 6:268-284.

[8] du Merle, O., Hansen, P., Jaumard, B. and Mladenovic, N. An Interior Point Algorithm for Minimum Sum of Squares Clustering. SIAM Journal on Scientific Computing 2000; 21(4):1485-1505.

[9] Feo, T.A. and Resende, M.G.C. A Probabilistic Heuristic for a Computationally Difficult Set Covering Problem. Operations Research Letters 1989; 8:67-71.

[10] Feo, T.A. and Resende, M.G.C. Greedy Randomized Adaptive Search Procedures. Journal of Global Optimization 1995; 2:1-27.

[11] Glover, F. A Template for Scatter Search and Path Relinking. In Artificial Evolution, Lecture Notes in Computer Science, 1363, J.-K. Hao, E. Lutton, E. Ronald, M. Schoenauer and D. Snyers (Eds.), Springer 1998, pp. 13-54.

[12] Glover, F., Laguna, M. and Martí, R. Fundamentals of Scatter Search and Path Relinking. Control and Cybernetics 2000; 39,3:653-684.

[13] Hansen, P. and Mladenovic, N. J-Means: A New Local Search Heuristic for Minimum Sum-of-Squares Clustering. Pattern Recognition 2001; 34(2):405-413.

[14] Howard, R. Classifying a Population into Homogeneous Groups. In Lawrence, J.R. (Ed.), Operational Research in the Social Sciences. Tavistock Publ., London 1966.

[15] Jancey, R.C. Multidimensional Group Analysis. Australian J. Botany 1966; 14:127-130.

[16] Klein, R.W. and Dubes, R.C. Experiments in Projection and Clustering by Simulated Annealing. Pattern Recognition 1989; 22:213-220.

[17] Koontz, W.L.G., Narendra, P.M. and Fukunaga, K. A Branch and Bound Clustering Algorithm. IEEE Transactions on Computers 1975; C-24:908-915.

[18] Laguna, M. Scatter Search. In Handbook of Applied Optimization, P. M. Pardalos and M. G. C. Resende (Eds.), Oxford University Press, New York 2002, pp. 183-193.

[19] Pacheco, J. and Valencia, O. Design of Hybrids for the Minimum Sum-of-Squares Clustering Problem. Computational Statistics and Data Analysis 2003; 43,2:235-248.

[20] Pitsoulis, L.S. and Resende, M.G.C. Greedy Randomized Adaptive Search Procedures. In Handbook of Applied Optimization, P. M. Pardalos and M. G. C. Resende (Eds.), Oxford University Press 2002, pp. 168-182.

[21] Reinelt, G. TSPLIB: A Travelling Salesman Problem Library. ORSA Journal on Computing 1991; 3:376-384.

[22] Späth, H. Cluster Analysis Algorithms for Data Reduction and Classification of Objects. Ellis Horwood, Chichester 1980.

Source Insight用法精细

Source Insight实质上是一个支持多种开发语言(java,c ,c 等等) 的编辑器,只不过由于其查找、定位、彩色显示等功能的强大,常被我 们当成源代码阅读工具使用。 作为一个开放源代码的操作系统,Linux附带的源代码库使得广大爱好者有了一个广泛学习、深入钻研的机会,特别是Linux内核的组织极为复杂,同时,又不能像windows平台的程序一样,可以使用集成开发环境通过察看变量和函数,甚至设置断点、单步运行、调试等手段来弄清楚整个程序的组织结构,使得Linux内核源代码的阅读变得尤为困难。 当然Linux下的vim和emacs编辑程序并不是没有提供变量、函数搜索,彩色显示程序语句等功能。它们的功能是非常强大的。比如,vim和emacs就各自内嵌了一个标记程序,分别叫做ctag和etag,通过配置这两个程序,也可以实现功能强大的函数变量搜索功能,但是由于其配置复杂,linux附带的有关资料也不是很详细,而且,即使建立好标记库,要实现代码彩色显示功能,仍然需要进一步的配置(在另一片文章,我将会讲述如何配置这些功能),同时,对于大多数爱好者来说,可能还不能熟练使用vim和emacs那些功能比较强大的命令和快捷键。 为了方便的学习Linux源程序,我们不妨回到我们熟悉的window环境下,也算是“师以长夷以制夷”吧。但是在Window平台上,使用一些常见的集成开发环境,效果也不是很理想,比如难以将所有的文件加进去,查找速度缓慢,对于非Windows平台的函数不能彩色显示。于是笔者通过在互联网上搜索,终于找到了一个强大的源代码编辑器,它的卓越性能使得学习Linux内核源代码的难度大大降低,这便是Source Insight3.0,它是一个Windows平台下的共享软件,可以从https://www.sodocs.net/doc/0317946054.html,/上边下载30天试用版本。由于Source Insight是一个Windows平台的应用软件,所以首先要通过相应手段把Linux系统上的程序源代码弄到Windows平台下,这一点可以通过在linux平台上将 /usr/src目录下的文件拷贝到Windows平台的分区上,或者从网上光盘直接拷贝文件到Windows平台的分区来实现。 下面主要讲解如何使用Source Insight,考虑到阅读源程序的爱好者都有相当的软件使用水平,本文对于一些琐碎、人所共知的细节略过不提,仅介绍一些主要内容,以便大家能够很快熟练使用本软件,减少摸索的过程。 安装Source Insight并启动程序,可以进入图1界面。在工具条上有几个值得注意的地方,如图所示,图中内凹左边的是工程按钮,用于显示工程窗口的情况;右边的那个按钮按下去将会显示一个窗口,里边提供光标所在的函数体内对其他函数的调用图,通过点击该窗体里那些函数就可以进入该函数所在的地方。

STATA命令应用及详细解释(汇总情况)

STATA命令应用及详细解释(汇总) 调整变量格式: format x1 .3f ——将x1的列宽固定为10,小数点后取三位format x1 .3g ——将x1的列宽固定为10,有效数字取三位format x1 .3e ——将x1的列宽固定为10,采用科学计数法format x1 .3fc ——将x1的列宽固定为10,小数点后取三位,加入千分位分隔符 format x1 .3gc ——将x1的列宽固定为10,有效数字取三位,加入千分位分隔符 format x1 %-10.3gc ——将x1的列宽固定为10,有效数字取三位,加入千分位分隔符,加入“-”表示左对齐 合并数据: use "C:\Documents and Settings\xks\桌面\2006.dta", clear merge using "C:\Documents and Settings\xks\桌面\1999.dta" ——将1999和2006的数据按照样本(observation)排列的自然顺序合并起来 use "C:\Documents and Settings\xks\桌面\2006.dta", clear merge id using "C:\Documents and Settings\xks\桌面 \1999.dta" ,unique sort ——将1999和2006的数据按照唯一的(unique)变量id来合并,

在合并时对id进行排序(sort) 建议采用第一种方法。 对样本进行随机筛选: sample 50 在观测案例中随机选取50%的样本,其余删除 sample 50,count 在观测案例中随机选取50个样本,其余删除 查看与编辑数据: browse x1 x2 if x3>3 (按所列变量与条件打开数据查看器)edit x1 x2 if x3>3 (按所列变量与条件打开数据编辑器) 数据合并(merge)与扩展(append) merge表示样本量不变,但增加了一些新变量;append表示样本总量增加了,但变量数目不变。 one-to-one merge: 数据源自stata tutorial中的exampw1和exampw2 第一步:将exampw1按v001~v003这三个编码排序,并建立临时数据库tempw1 clear use "t:\statatut\exampw1.dta" su ——summarize的简写 sort v001 v002 v003 save tempw1

USB INF文件详解(USB)

INF文件详解 INF文件格式要求 一个INF文件是以段组织的简单的文本文件。一些段油系统定义(System-Defined)的名称,而另一些段由INF文件的编写者命名。每个段包含特定的条目和命名,这些命名用于引用INF文件其它地方定义的附加段。 INF文件的语法规则: 1、要求的内容:在特定的INF文件中所要求的必选段和可选段、条目及命令依赖于所要安装的设备组件。端点顺序可以是任意的,大多数的INF文件安装惯用的次序来安排各个段。 2、段名:INF文件的每个段从一个括在方括号[]中的段名开始。段名可以由系统定义或INF编写者定义 在Windows 2000中,段名的最大长度为255个字符。在Windows 98中,段名不应该超过28个字符。如果INF设计要在两个平台上运行,必须遵守最小的限制。段名、条目和命令不分大小写。在一个INF文件中如果有两个以上的段有相同的名字,系统将把其条目和命令合并成一个段。每个段以另一个新段的开始或文件的结束为结束。 3、使用串标记:在INF文件中的许多值,包括INF编写者定义的段名都可以标示成%strkey%形式的标记。每个这样的strkey必须在INF文件的Strings 段中定义为一系列显示可见字符组成的值。 4、行格式、续行及注释:段中的每个条目或命令以回车或换行符结束。在条目或命令中,“\”可以没用做一个显示的续行符;分好“;”标示后面的内容是注释;可以用都好“,”分隔条目和命令中提供的多个值。 INF文件举例 下面是一个完整的.inf文件,它是Windows 2000 DDK提供的USB批量阐述驱动程序范例中所附的.inf文件。 ; Installation inf for the Intel 82930 USB Bulk IO Test Board ; ; (c) Copyright 1999 Microsoft ; [Version] Signature="$CHICAGO$" Class=USB ClassGUID={36FC9E60-C465-11CF-8056-444553540000} provider=%MSFT% DriverVer=08/05/1999 [SourceDisksNames] 1="BulkUsb Installation Disk",,, [SourceDisksFiles] BULKUSB.sys = 1 BULKUSB.inf = 1

source insight解析命令行

安装完SI后,会在安装一个如下的文件 我的文档\Source Insight\c.tom c.tom的功能与C语言中的#define类似。打开这个文件,会看到有很多空格分割的字符串,SI在我们阅读代码时,自自动将空格前的字符串替换为空格后的字符串(仅仅是影响阅读,不影响编译喔)。 举两个例子。 #define AP_DECLARE(type) type AP_DECLARE(int) ap_calc_scoreboard_size(void) { .... } source insight 把AP_DECLARE当作了函数,当想查ap_calc_scoreboard_size的时候总是很麻烦,不能直接跳转. 我的文档\Source Insight\c.tom 加入 AP_DECLARE(type) type 如下的代码如何让SI 识别出f是一个函数? #define EXPORT_CALL(return,functionname) return functionname EXPORT_CALL (int, f1()) 我的文档\Source Insight\c.tom 加入 EXPORT_CALL(return,functionname) return functionname 同时,在#define中,标准只定义了#和##两种操作。#用来把参数转换成字符串,##则用来连接前后两个参数,把它们变成一个字符串。(c.tom的功能与支持##,不支持#好像) 这个技巧我在阅读zebra的命令行代码时也用到了。 比如下吗一段代码:(DEFUN是一个宏定义,这个文件中有很多这样的DEFUN。不修改c.tom 之前看到的是这样的)

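Introduction to CarSim

CarSim is simulation software dedicated to vehicle dynamics. CarSim models run on a computer 3 to 6 times faster than real time and simulate the vehicle's response to driver, road, and aerodynamic inputs. The software is used mainly to predict and simulate a vehicle's handling stability, braking, ride comfort, power performance, and fuel economy, and it is also widely used in the development of modern vehicle control systems. CarSim makes it easy to define the test environment and test procedure flexibly, and to define in detail the characteristic parameters and characteristic files of each vehicle system. Its main features are as follows:
- Modeling and simulation of passenger cars, light trucks, light multi-purpose vehicles, and SUVs;
- Analysis of power performance, fuel economy, handling stability, braking, and ride comfort;
- Plotting and analysis through software such as MATLAB and Excel;
- Viewing of simulation results as plots and as 3D animation;
- A graphical data management interface, a vehicle model solver, a plotting tool, a 3D animation playback tool, and a power spectrum analysis module;
- Stable and reliable programs;
- The software can run at real-time speed and supports hardware-in-the-loop. CarSim can be extended to CarSim RT, a real-time vehicle model that provides interfaces to several hardware real-time systems for joint HIL simulation;
- Advanced event handling for simulating complex driving conditions;
- A friendly graphical user interface for fast, convenient modeling and simulation;
- Modeling databases for many vehicle types;
- Output of simulation results for user-defined variables;
- Mutual calls with Simulink;
- Batch runs of multiple simulation conditions.

CarSim characteristics

1. Ease of use
All parts of the software are controlled from a single graphical user interface. The user runs a simulation by clicking "Run Math Model", views the results as 3D animation by clicking the "Animate" button, and views result plots by clicking the "Plot" button. In a short time you can learn the basics of CarSim, complete a simple simulation, and inspect the results.
All characteristic parameters to be set or adjusted can be handled in the graphical interface. More than 150 graphical screens give access to all vehicle properties, control inputs, road geometry, plotting, and simulation settings. Building a vehicle model from the CarSim database and setting up a simulation condition takes only a short time. The database contains a series of examples and lets users build library files of components, vehicles, and test results. This allows users to switch quickly between different simulations, compare results, and make corresponding changes.
The vehicle and its parameters are data and tables obtained by various test methods, including physical tests and simulated tests from suspension design software. CarSim sets a new standard for building vehicle models quickly.

2. Reports and presentations
Data output by CarSim can be exported and added to reports, Excel worksheets, and PowerPoint presentations. Simulation results can also be imported easily into various presentation software.

3. Speed
CarSim combines a full-vehicle mathematical model with high computation speed: on a PC with a 3 GHz clock, the vehicle model can run at ten times real-time speed. This speed makes it easy for CarSim to support real-time hardware-in-the-loop (HIL) or software-in-the-loop (SIL) simulation. CarSim supports Applied Dynamics International (ADI), A&D, dSPACE, ETAS, Opal-RT, and other real-time simulation systems. The speed also makes CarSim suitable for optimization and design of experiments.

4. Accuracy and validation
CarSim is built on decades of research into vehicle behavior and represents that behavior with mathematical models. Whenever new content is added, it is validated by corresponding experiments. Vehicle manufacturers and suppliers that use CarSim have provided many reports on the agreement between test results and CarSim simulation results.

5. Standardization and extensibility
CarSim runs on ordinary Windows systems and portable computers, and also on computers used for real-time systems. The kinematic equations of the math models are standardized and work together with user-added controllers, test equipment, and subsystems. The models come in three forms:
- Built-in modules provided with CarSim functions.
- MATLAB/Simulink S-functions with the model embedded.
- Library files with extensible C code for generating stand-alone EXE files.

6. Effective, stable, and reliable
CarSim includes all the tools needed for vehicle dynamics simulation and for viewing results. MSC uses an advanced automatic code generator to produce stable, reliable simulation programs, which is much faster than developing software by hand coding.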

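Complete Stata Command Reference (full Word version, recommended document)

*********Panel data econometric analysis and software implementation*********
Note: a considerable part of the following do-file comes from the Stata tutorial by Lian Yujun of Sun Yat-sen University; thanks to him for his contribution. I have made some modifications and selections.
*----------Panel data models
* 1. Static panel models: FE and RE
* 2. Model selection: FE vs POLS, RE vs POLS, FE vs RE (POLS is pooled ordinary least squares)
* 3. Tests for heteroskedasticity, serial correlation, and cross-sectional dependence
* 4. Dynamic panel models (DIF-GMM, SYS-GMM)
* 5. Panel stochastic frontier models
* 6. Panel cointegration analysis (FMOLS, DOLS)
*** Note: items 1-5 are implemented in Stata; item 6 is implemented in GAUSS.
* Production efficiency analysis (especially TFP): data envelopment analysis (DEA) and stochastic frontier analysis (SFA)
*** Note: DEA is implemented with DEAP 2.1 and SFA with Frontier 4.1. The latter in particular focuses on comparing the C-D and translog production functions and the one-step and two-step methods. Commonly applied to regional economic disparities, FDI spillover effects, efficiency of industrial sectors, and so on.
* Spatial econometric analysis: the SLM and SEM models
* Note: Stata and MATLAB are used together. Commonly applied to spatial spillover effects (R&D), fiscal decentralization, local government public behavior, and so on.
* ---------------------------------
* --------I. Common data handling and plotting-----------
* ---------------------------------
* Declare the panel format
xtset id year          /* id is the cross-section identifier, year is the time identifier */
xtdes                  /* data structure */
xtsum logy h           /* summary statistics */
sum logy h             /* summary statistics */
* Add a label or rename a variable
label var h "Human capital"
rename h hum
* Sorting
sort id year           /* Stata panel data layout */
sort year id           /* DEA layout */
* Drop individual years or provinces
drop if year<1992
drop if id==2          /* note the use of == */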
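For readers who do not use Stata, the effect of the sort and drop commands above can be sketched in Python. This is only an illustration on rows held as dictionaries: the function names prepare_panel and dea_order are hypothetical, and missing values (which Stata treats specially in comparisons) are not considered.

```python
def prepare_panel(rows):
    """Mimic: drop if year<1992, drop if id==2, then sort id year."""
    kept = [r for r in rows if r["year"] >= 1992 and r["id"] != 2]
    # Panel layout: all years of one unit, then the next unit.
    return sorted(kept, key=lambda r: (r["id"], r["year"]))


def dea_order(rows):
    """Mimic: sort year id (all units of one year, then the next year)."""
    return sorted(rows, key=lambda r: (r["year"], r["id"]))
```

The two orderings hold the same rows; only the grouping differs, by unit for the panel layout and by year for the DEA layout.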

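ARM: Scatter-Loading Description Files

How scatter loading is implemented (scatter)

Many people do not fully understand scatter loading, but its principle is actually simple, and like many loading schemes it comes from everyday life.

Embedded technology is developing quickly and new kinds of memory keep appearing, but they differ in capacity, cost, and speed. Embedded systems are also cost-sensitive, so choosing memory sensibly and making full use of memory resources is a problem that has to be solved. We engineers like nothing better than finding problems and solving them: because embedded systems are sensitive to memory, using memory resources well requires a sensible method. Engineers found that the program can be split across memories of different cost to find the balance point. For example, code that is large but not currently running is kept in flash memory, which is large, cheap, and relatively slow, and is fetched when needed. There is a catch, however: embedded systems place high demands on signal-processing speed, as the definition of a real-time operating system reflects. Frequently used program segments therefore have to sit in high-speed memory if they are to run fast, and that has a price: higher cost and small capacity. As technology advances this problem will presumably be solved, and the search for a balance point will no longer be needed. That is enough digression; on to the main topic.

A program is always in one of two states: running or at rest. When the system is powered off, the program has to be kept in non-volatile memory, where it is simply laid out in address order; in other words, nobody cares how it is placed as long as nothing is lost. Once the system powers up, the CPU starts running. The CPU is a fast device and memory can never quite keep up, so the only option is to narrow the gap by making memory reads as fast as possible. The type of memory determines its speed, so placing code in the fastest memory available is the preferred solution: the program to be executed is temporarily moved into faster RAM. That move is what program loading is about, and it is the problem to be solved here.

An image file consists of regions, output sections, and input sections. Do not overthink it: the relationship between them is simply one of containment, as follows:
image file > region > output section > input section

Input sections:
The input sections are the code we write + initialized data + data that should be initialized to 0 + uninitialized data, or in English terms: RO (ReadOnly), RW (ReadWrite), ZI (ZeroInitialized), and NOINIT (Not Initialized). The ARM linker groups input sections that share the same attribute into an output section. Consider what we normally write:
AREA RESET, CODE, READONLY
AREA DSEG1, DATA, READWRITE
AREA HEAP, NOINIT, READWRITE
Can you see the attributes?

Output sections:
To simplify the build process and make section addresses easier to obtain, several input sections with the same attribute are combined according to certain rules; naturally, the attribute of such an output section and the attribute of the input sections it contains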

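The source Command and the "." (Dot) Command

source is a built-in command of the bash shell and originates from the C shell. The dot "." is an alternative spelling of source with the same usage; it originates from the Bourne shell.
source makes a script take effect immediately in the current environment.
source executes all the commands in the script regardless of the file's permissions (the file does not need to be executable).
source is typically used to re-run an initialization file that has just been modified, such as .bash_profile or .profile.
A sourced script can change the environment of the shell that invokes it, whereas export only passes variables down to child shells.

Usage examples:
$ source ~/.bashrc
or:
$ . ~/.bashrc
After this runs, the contents of ~/.bashrc take effect immediately.

A typical use: before using Android's mm and related commands, the following commands must be run first:
$ cd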
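The contrast between sourcing a script and running it as a separate process can be illustrated by analogy in Python. This is only an analogy under stated assumptions, not shell code: the variable name DEMO_VAR and the functions run_in_child and run_in_current are hypothetical, and exec stands in loosely for source.

```python
import os
import subprocess
import sys

# A tiny "script" that changes the environment of whatever process runs it.
SCRIPT = "import os\nos.environ['DEMO_VAR'] = 'set-by-script'\n"


def run_in_child(script):
    """Run the script in a separate interpreter, like executing ./script.sh."""
    subprocess.run([sys.executable, "-c", script], check=True)
    # The child changed only its own copy of the environment.
    return os.environ.get("DEMO_VAR")


def run_in_current(script):
    """Run the script inside this process, loosely like `source script.sh`."""
    exec(script, {})
    # The change was made in this process, so it is visible here.
    return os.environ.get("DEMO_VAR")
```

Starting from an environment where DEMO_VAR is unset, run_in_child leaves it unset in the caller, while run_in_current makes it visible afterwards, which mirrors why a modified ~/.bashrc has to be sourced rather than executed.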

UCINET Menu Glossary (English and Chinese)

FILES 文件:
change default folder 改变默认文件夹
create new folder 创造新文件夹
copy Ucinet dataset 复制UCINET数据集
rename Ucinet dataset 重命名ucinet
delete Ucinet dataset 删除ucinet
print setup 打印设置
text editor 文档编辑程序
view previous output 查看前一个输出
launch Mage 启动mage
launch Pajek 启动pajek
exit 退出

DATA 数据:
Spreadsheets: matrix 电子表格:矩阵
Random: sociometric/bernoulli/multinomial 随机:计量社会学/伯努利分布/多项分布
Import: DL/multiple DL files/VNA/pajek/krackplot/negopy/raw/excel matrix 输入
Export: DL/multiple DL files/VNA/pajek/krackplot/negopy/raw/excel matrix 输出
css
Browse 浏览
Display 显示
Describe 描述
Extract 解压缩
Remove 移动
Unpack 解包
Join 加入
Sort 排序
Permute 交换
Transpose 调换
Match net and attrib datasets 匹配网和属性数据集
Match multiple datasets 匹配多重数据集
Attribute to matrix 属性到矩阵
Affiliations (2-mode to 1-mode) 联系2模到1模
Subgraphs from partitions 子图分割
Partitions to sets 集合分割
Create node sets 创造节点设置
Reshape 变形

TRANSFORM 变换:
Block 块
Collapse 塌缩
Dichotomize 对分
Symmetrize 对称

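CarSim Full-Vehicle Modeling Parameters

I. Vehicle body
Body information in the unladen condition
(1) Distance from the sprung-mass center of gravity to the front axle, mm
(2) Height of the sprung-mass center of gravity above the ground, mm
(3) Wheelbase, mm
(4) Lateral offset of the center of gravity, mm
(5) Sprung mass, kg
(6) Moment of inertia about the x axis (Ixx), kg-m2
(7) Moment of inertia about the y axis (Iyy), kg-m2
(8) Moment of inertia about the z axis (Izz), kg-m2
(9) Product of inertia for the x and y axes (Ixy), kg-m2
(10) Product of inertia for the x and z axes (Ixz), kg-m2
(11) Product of inertia for the y and z axes (Iyz), kg-m2

II. Aerodynamics
(1) Aerodynamic reference point X, mm
(2) Aerodynamic reference point Y, mm
(3) Aerodynamic reference point Z, mm
(4) Frontal area, m2
(5) Aerodynamic reference length, mm
(6) Air density, kg/m3
(7) CFx (aerodynamic coefficient) versus slip angle (the angle between the direction of vehicle travel and the direction of airflow)
(8) CFy versus slip angle
(9) CFz versus slip angle
(10) CMx versus slip angle
(11) CMy versus slip angle
(12) CMz versus slip angle

III. Powertrain
1. The simplest configuration
(1) Share of drive delivered to the rear wheels: 1 means rear-wheel drive, 0 means front-wheel drive
(2) Engine power, kW
2. Front-wheel drive or rear-wheel drive
1) Engine characteristics
(1) Engine torque (N-m) versus engine speed (rpm) at each throttle position
(2) Throttle opening time lag, sec
(3) Throttle closing time lag, sec
(4) Rotational inertia of the crankshaft, kg-m2
(5) Engine idle speed, rpm
2) Clutch characteristics
a. Torque converter
(1) Torque ratio (output to input) versus speed ratio (output to input)
(2) Torque converter parameter 1/K versus speed ratio (output to input)
(3) Rotational inertia of the input shaft, kg-m2
(4) Rotational inertia of the output shaft, kg-m2
b. Mechanical clutch
(1) Maximum output torque (N-m) versus clutch engagement (0 means fully engaged, 1 means fully disengaged)
(2) Engagement time lag, sec
(3) Disengagement time lag, sec
(4) Rotational inertia of the input shaft, kg-m2
(5) Rotational inertia of the output shaft, kg-m2
3) Transmission
(1) Gear ratios of the forward gears and reverse gear, rotational inertia (kg-m2), forward drive and reverse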

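Scatter Files (Scatter-Loading Files) and Their Use

Scatter loading lets you describe the code and data in memory at load time and at run time in a text file, called a scatter-loading description file, for use at link time.

(1) Scatter-loading regions
Scatter-loading regions fall into two categories:
- Load regions, which contain the application's code and data at reset and load time.
- Execution regions, which contain the application's code and data while it executes. During application startup, one or more execution regions can be created from each load region.
Every piece of code and data in the image falls into exactly one load region and one execution region.

(2) Scatter file example
ROM_LOAD 0x0000 0x4000
{
    ROM_EXEC 0x0000 0x4000      ; Root region
    {
        * (+RO)                 ; All code and constant data
    }
    RAM 0x10000 0x8000
    {
        * (+RW, +ZI)            ; All non-constant data
    }
}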
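What startup code does for a layout like the example above can be sketched as a simulation: RW data is copied from the load region in ROM to the RAM execution region, and the ZI area is filled with zeros. This Python sketch is a toy model under stated assumptions, not ARM's library code: the function scatter_load and its parameters are hypothetical, the RW initial values are assumed to sit in ROM at rw_load_addr, and RAM is assumed to hold arbitrary bytes (0xFF here) at power-up.

```python
def scatter_load(rom, rw_load_addr, rw_size, zi_size, ram_size):
    """Return simulated RAM contents after the startup copy and zero-fill."""
    if rw_size + zi_size > ram_size:
        raise ValueError("RW and ZI data do not fit in the RAM execution region")
    ram = bytearray(b"\xff" * ram_size)  # assumption: RAM content is arbitrary at power-up
    # RW: initial values live in the load region and are copied to RAM.
    ram[0:rw_size] = rom[rw_load_addr:rw_load_addr + rw_size]
    # ZI: takes no space in ROM; it is simply cleared in RAM.
    ram[rw_size:rw_size + zi_size] = bytes(zi_size)
    return ram
```

RO code and constants are not touched here because, in the example, ROM_EXEC is a root region whose load and execution addresses are the same.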

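(3) Scatter file syntax
load_region_name start_address | "+"offset [attributes] [max_size]
{
    execution_region_name start_address | "+"offset [attributes][max_size]
    {
        module_select_pattern ["(" ("+" input_section_attr | input_section_pattern) ([","] "+" input_section_attr | "," input_section_pattern)) * ")"]
    }
}

load_region: a load region, the area that holds permanent data (the program and read-only variables).
execution_region: an execution region; at run time, data must be copied from the load region to the corresponding execution region before it can execute correctly.

load_region_name: the load region name, used by the linker to tell load regions apart; at most 31 characters.
start_address: the start address, giving the first address of the region.
+offset: the end address of the previous load region plus offset is used as the start address of the current region; offset must be 0 or a multiple of 4.
attributes: region attributes; the following can be set:
    PI         position-independent placement;
    RELOC      relocatable; relocation information is kept so that the section can be relocated to a new execution region;
    OVERLAY    overlay; allows several execution regions at the same address (not supported by ADS);
    ABSOLUTE   absolute address (default).
max_size: the size of the region.

execution_region_name: the execution region name.
start_address: the first address of the execution region; must be word-aligned.
+offset: as above.
attributes: as above:
    PI         position-independent; code in the region can be moved anywhere and still execute;
    OVERLAY    overlay;
    ABSOLUTE   absolute address (default);
    FIXED      fixed address;
    UNINIT     the ZI section of the region is not initialized.

module_select_pattern: an object-file filter that supports the wildcards "*" and "?"; *.o matches all object files, and * (or ".ANY") matches all object files and libraries.
input_section_attr: each input_section_attr must follow a "+" and is case-insensitive:
    RO-CODE or CODE
    RO-DATA or CONST
    RO or TEXT, selects both RO-CODE and RO-DATA
    RW-DATA
    RW-CODE
    RW or DATA, selects both RW-CODE and RW-DATA
    ZI or BSS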
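The start_address | "+"offset rule can be sketched as a small address calculation. This Python sketch is an illustration under stated assumptions, not the linker's algorithm: the function resolve_bases is hypothetical, each region is given as (name, base, used_size) with used_size standing for the space the region actually occupies, and a "+N" base is taken relative to the end of the region listed just before it.

```python
def resolve_bases(regions):
    """Map region names to base addresses.

    regions: list of (name, base, used_size); base is an int address
    or a string such as "+0" or "+0x10".
    """
    resolved = {}
    prev_end = 0
    for name, base, used_size in regions:
        if isinstance(base, str) and base.startswith("+"):
            offset = int(base[1:], 0)  # accepts decimal and 0x-prefixed hex
            if offset % 4 != 0:
                raise ValueError("offset must be 0 or a multiple of 4")
            address = prev_end + offset
        else:
            address = int(base)
        resolved[name] = address
        prev_end = address + used_size
    return resolved
```

For instance, a region given as "+0" starts exactly where the previous region ends, and "+8" leaves an 8-byte gap after it.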

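Notes on Source Insight Projects

Note: Source Insight is the software we use most often at work. It is invaluable for finding functions and variables and for modifying code. Using Source Insight proficiently improves working efficiency and helps in understanding the architecture of the whole project.

I. Steps for creating a Source Insight project
1. Open Source Insight, as shown in the figure below:
2. Next to the project directory, create a folder named code_s (any name will do, but preferably one that identifies the project).
3. In Source Insight, on the menu bar, choose Project > New Project; the dialog shown in the figure below appears:
In this dialog, "New project name" is the name of the Source Insight project to be created; for example, we name it M53_code. "Where do you want to store the project data files?" asks for the path where the project files will be stored; we choose the path of the code_s folder created earlier. The result is as shown in the figure.
4. Click OK to reach the screen shown in the figure below. On this screen we leave all options at their defaults, except that the project source path must be the path of the project we want to index. For example, if the project is at F:\M53\code, we select that path, as shown in the figure below.
5. Click OK to go to the next window, as shown in the figure. After we make the selection, the dialog shown in the figure below appears; we select everything listed and click OK. Source Insight then searches for the source files; when the search finishes, the result is as shown in the figure. The number of files differs from project to project; here there are 7472 files. We click OK and then Close to close the window. The project has now been created.

II. Synchronizing the Source Insight project with the source project
After the project has been created as described above, since changes made in Source Insight need to modify the source project, we need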

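VFP Commands

?                            Display the expression list on the next line
??                           Display the expression list on the current line
@...                         Display data on the screen, or print it on the printer, in a user-defined format
ACCEPT                       Assign a character string to a memory variable
APPEND                       Append records to a database file
APPEND FROM                  Add records from another database file to the database file
AVERAGE                      Compute the arithmetic mean of numeric expressions
BROWSE                       Display and edit database records full screen
CALL                         Run a binary file in memory
CANCEL                       Terminate program execution and return to the dot prompt
CASE                         Specify a condition in a multi-way selection statement
CHANGE                       Edit the specified fields and records in a database
CLEAR                        Clear the screen and move the cursor to the upper-left corner
CLEAR ALL                    Close all open files, release all memory variables, and select work area 1
CLEAR FIELDS                 Clear the field list created with the SET FIELDS TO command
CLEAR GETS                   Release the variables of any current GET statements from a full-screen READ
CLEAR MEMORY                 Clear all current memory variables
CLEAR PROGRAM                Clear the program buffer
CLEAR TYPEAHEAD              Clear the keyboard buffer
CLOSE                        Close files of the specified type
CONTINUE                     Move the record pointer to the next record that satisfies the condition given in a LOCATE command; used after LOCATE, and an error occurs if there is no LOCATE
COPY TO                      Copy the database file in use to another database file or a text file
COPY FILE                    Copy a file of any type
COPY STRUCTURE EXTENDED TO   Create a new database file whose records describe the structure of the current database file
COPY STRUCTURE TO            Copy the structure of the database file in use to the target database file
COUNT                        Count the specified records within the given scope
CREATE                       Define a new database file structure and register it in the catalog
CREATE FROM                  Create a new database file from a structure file
CREATE LABEL                 Create and edit a label format file
CREATE REPORT                Create and edit a report format file
DELETE                       Mark the specified records for deletion
DELETE FILE                  Delete a file that is not open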


The Role of, and Difference Between, targetlibs and sourcelibs in the WinCE sources File

[Original] Posted by wwfiney on 2009-2-11 17:23:04

In WinCE, the sources file is essential for compiling and linking, and anyone who has done WinCE BSP development will know it well. It has two keywords, targetlibs and sourcelibs, and I have long been curious about the difference between them, so I looked up some material to share with everyone.

A quick search turns up a basic answer, for example:
TARGETLIBS: if a library is provided to the caller as a DLL, use TARGETLIBS. Only a function address is linked, and the system loads the linked library at run time. coredll.lib is such a library file. This is dynamic linking.
SOURCELIBS: links in the function bodies from the library. This is static linking; the functions used end up as a copy in our own file.

That answer basically settles the question, but the following answer shows something deeper:

This is componentization feature of Windows CE. The link has two steps. First, whatever is in SOURCELIBS gets combined in a single library yourproductname_ALL.lib. In the second step, executable module is linked from that library and all the targetlibs. This is done to allow stubs to be conditionally linked: if the function is defined into your source already, stubs get excluded. If it is not there, stubbed version (returning ERROR_NOT_IMPLEMENTED or something to that effect) gets linked in instead. If the link were to be performed in just one step, it would be impossible to predict which version (real or stub) would get included. As it is, implemented functions have a priority over stubs.
-- Sergey Solyanik, Windows CE Core OS

In short, the source files you list in sources are compiled first. Then, in the link stage, all the sourcelibs are first combined into a single lib, which then takes part in the link together with the libs named in targetlibs. Of course, what targetlibs names can be the lib file of a DLL. In the CE help files,

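CarSim_Simulink

Simulink interface
(1) Variables imported from Simulink into CarSim (import variables)
More than 160 variables can be imported from Simulink into CarSim. They fall mainly into the following groups:
- Control inputs
- Tire/road inputs
- Tire forces and moments
- Spring and damper forces
- Steering system angles
- Driveline torques
- Brake torques and brake pressures
- Wind inputs
- Arbitrary forces and moments
Variables can be defined in Simulink, or defined in other software and imported into the Simulink model. Imported variables are added to the corresponding internal CarSim variables.

(2) Variables exported from CarSim to Simulink (export variables)
Export variables can be used in user-defined Simulink models. CarSim has as many as 560 export variables, such as vehicle position, attitude, and motion variables.
Figure: classification of CarSim export variables

The figure below shows an example of CarSim and Simulink co-simulation provided with CarSim.
Figure: simple driver model

CarSim and Simulink co-simulation
The whole co-simulation process is introduced using one of the Simulink co-simulation examples provided with CarSim (slightly modified), for example:
Vehicle: B-class, Hatchback: No ABS
Initial speed: 65 km/h
Throttle opening: 0
Gear control: closed-loop, four-speed mode
Braking: emergency braking after 2 s
Steering wheel angle: 0 deg
Road: split-mu surface
Simulation time: 10 s
Simulation step: 0.001 s
Note: two vehicles of the same model are run under the same simulation conditions, but one of them is connected to an ABS controller built in Simulink. This is equivalent to one vehicle with ABS and one without, which makes comparison easy.

(1) Double-click the CarSim icon on the desktop to start CarSim; CarSim 8.0 is used here.
(2) The "select database" dialog appears, as shown in the figure below. After choosing the database folder, click "Continue with the selected database". To stop this dialog from appearing again, tick "Don't show this window the next time you start" in the lower-left corner.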

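(Full version) Stata Study Notes and Summary of International Trade Theory

Stata Study Notes

I. Getting to know the data
(1) Importing txt and csv data into Stata
1. Both kinds of data can be opened as plain text: create a new Notepad document, drag the file into Notepad to open the data, and copy it.
2. Click the edit button in Stata, right-click, and choose paste special.
3. *.xls/*.xlsx data can be opened only with Excel, not with Notepad. Opened in Notepad it shows garbled characters, and it should not be saved from there, otherwise it cannot be recovered. Comma-separated data is usually csv data.
(2) Web data
Any table on a web page that can be selected can be copied into Excel. Web data can be found and downloaded by searching Baidu for "国家数据" (National Data).

II. Do-files and log files
After opening Stata, the first step is to open a do-file to record the steps and the history for later review. Three kinds of files are kept when working in Stata: the raw data (*.dta), the record of processing steps (*.do), and the processing history (*.smcl).

III. Importing into Stata
Stata does not recognize variable names containing Chinese; if the first row of the data contains Chinese, the import fails. For columns this is not a problem: such columns are simply not analyzed (Stata does not analyze strings, which are displayed in red; analyzed data are displayed in black). If the first row holds English variable names, select "Treat first row as variable names".
Before importing new data, the existing data must be cleared with the clear command.
To import space-separated data: copy, click the edit button in Stata or type the corresponding command, right-click and choose paste special, make the selection, and confirm. To import data from Excel, simply copy and paste. For comma-separated data, choose paste special, click comma, and confirm.
The Stata data format is *.dta; use this format consistently after importing.

IV. Basic operations (a few commands)
(1) use auto, clear: clears the existing data and loads the new auto dataset at the same time.
(2) browse: browses the data.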
