hotcloud14-chen_qi

Building a Scalable Multimedia Search Engine Using In?niband

Qi Chen1,Yisheng Liao2,Christopher Mitchell2,Jinyang Li2,and Zhen Xiao1 1Department of Computer Science,Peking University

2Department of Computer Science,New York University

Abstract

The approach of vertically partitioning the index has long been considered as impractical for building a distributed search engine due to its high communication cost.With the recent surge of interest in using High Performance Computing networks such as In?niband in the data cen-ter,we argue that vertical partitioning is not only prac-tical but also highly scalable.To demonstrate our point, we built a distributed image search engine(VertiCut)that performs multi-round approximate neighbor searches to ?nd similar images in a large image collection.

1Introduction

With the explosion of multimedia information on the Web,there is an increasing demand to build bigger and faster search engines to index such data.Inevitably,such a scalable search engine must be distributed in order to leverage the aggregate memory and CPU resources of many machines.

Distributed search has long been an open challenge. The traditional approach is to horizontally partition the index such that each machine stores a subset of all doc-uments and maintains a corresponding local in-memory index.To process a request,the search engine?rst dis-patches the query to all machines each of which performs a search locally.It then aggregates the partial results from all machines before returning the?nal answer to the user.Although this scheme can use the aggregate memory of many machines,it does not have scalable per-formance:as each request is processed by all machines, query latency and performance do not improve as more machines are added.

A promising alternative is vertical partitioning.In this scheme,the index of the entire document collection is cut vertically such that each machine stores a subset of the indexed features.To process a request,the search engine needs to fetch multiple indexed features(each of which is located on a different machine)and then?lter or join them locally to obtain the?nal results.This scheme is scalable:since the number of features being looked up is independent of the number of machines,one can poten-tially improve performance by adding more machines. Despite its promise for scalability,vertical partition-ing has long been considered impractical[9].This is because multimedia search engines need to sift through tens of thousands of indexed features,resulting in huge communication cost per query.Optimizations that re-duce communication signi?cantly increase the number of roundtrips during the search and hence are not practical when running on top of the Ethernet where a roundtrip is around~0.1ms.As a result,existing distributed search engines are almost always horizontally partitioned[3]. In this paper,we argue that now is time to adopt ver-tical partitioning for distributed search.This revolution is made possible by recent technological trends in dat-acenter networks that aim to incorporate High Perfor-mance Computing(HPC)network features such as ultra-low latency[1,14].With a roundtrip latency of several microseconds,a vertically-partitioned search engine can potentially issue tens of thousands of lookups sequen-tially to re?ne its results while still achieving sub-second query latency.

We demonstrate the practicality of vertical partitioning by building VertiCut,an image search engine running on top of In?niband,a popular HPC network.VertiCut im-plements a distributed version of the multi-index hash-ing algorithm[15]which performs K-Nearest-Neighbor (KNN)search in a high-dimensional binary space oc-cupied by all images.VertiCut uses a distributed hash table to(vertically)partition the indexed binary codes. To process a request quickly,VertiCut also uses two cru-cial optimizations.First,it performs approximate KNN search by issuing hash table reads sequentially and stop-ping early as soon as enough“good”results are found. This optimization drastically reduces the amount of hash table reads done by each query.Second,VertiCut elim-

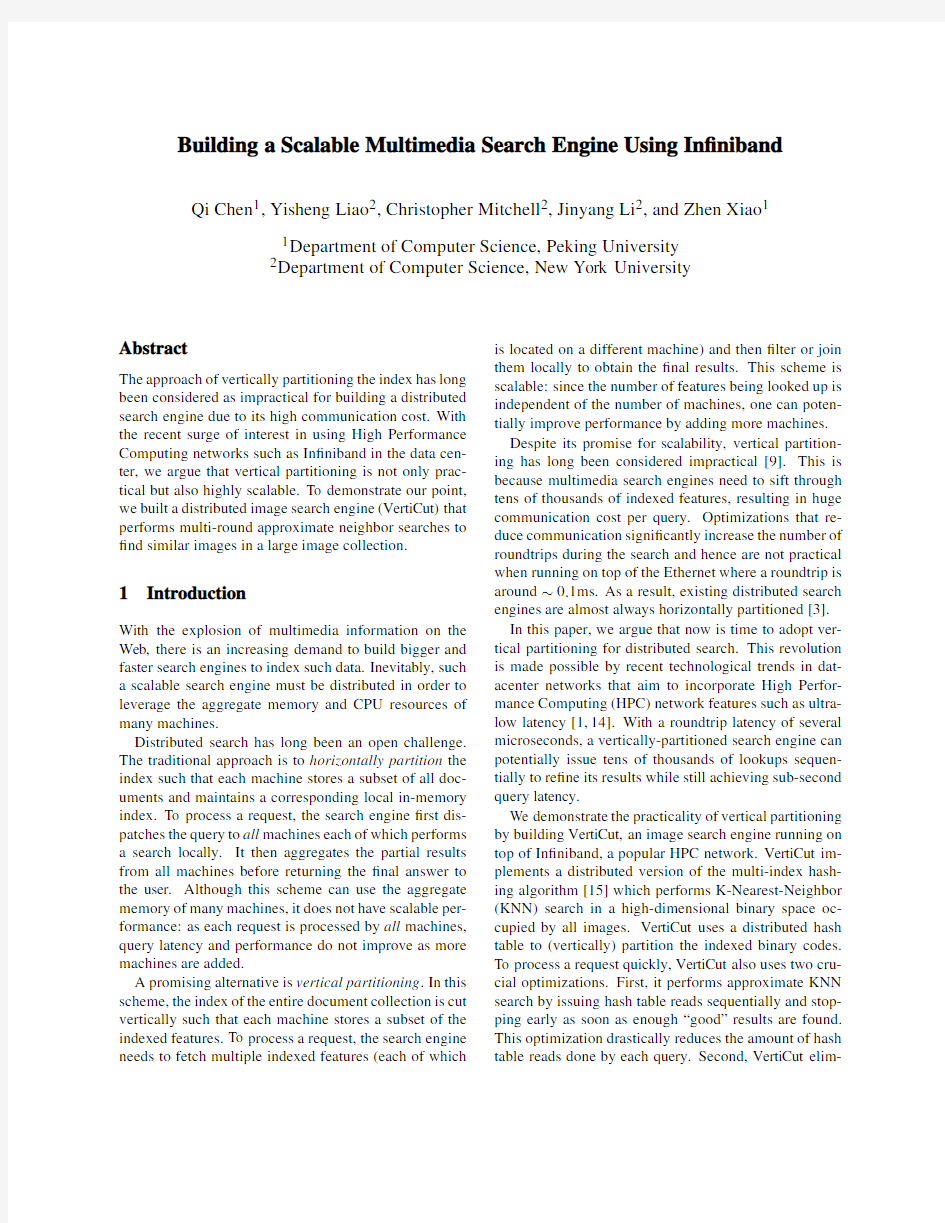

Figure1:The indexing process of the multimedia search

inates a huge number of lookups for non-existant keys by keeping a local bitmap at each machines.Our experi-ments show that VertiCut achieves better and more scal-able performance compared to a horizontally partitioned search engine.Furthermore,VertiCut’s KNN approxi-mation has very little impact on the quality of the search results.

2Challenges of Distributed Multimedia Search

Background on Multimedia Search:To search multi-media?les such as images,music and video,a common approach is based on mapping multimedia data to binary codes.Under this approach,an of?ine indexer?rst ex-tracts an array of features using some domain speci?c algorithms(https://www.sodocs.net/doc/1d8152339.html,ing SIFT[11]descriptors for images) for each?le.It then maps each high-dimensional feature descriptor into a compact binary code using a transfor-mation function that preserves semantic similarity such as a locality sensitive hash function[6].The common length of the binary code is128bits since128-bit binary code can achieve more accurate result according to[15]. The indexer then builds an index out of the collection of binary codes.Figure1illustrates the indexing pro-cess.To search for images that are similar to a given query image,the search engine?nds its k nearest neigh-bors(KNN)in terms of Hamming distance among the collection of binary codes.

There are many ways to perform KNN in the binary code space.The recently proposed multi-index hash-ing algorithm,MIH[15],provides a particularly ef?cient way to index and search these binary codes.Speci?cally, it divides each binary code into m disjoint pieces and in-dexes each part into a separate hash table(shown in Fig-ure2).

To perform k nearest neighbor search,the MIH algo-rithm?rst divides the query binary code Q into m pieces and then searches each piece Q i using the corresponding i-th hash table.Suppose the length of the binary code is s.The algorithm performs search in rounds with in-creasing search radius r starting from0.In a round that handles a speci?c search radius r,the algorithm does the following steps:

Figure2:Multiple index hash tables ?For each hash table i,enumerate all r s

Figure3:System Architecture

search engine does the KNN search in the same way as the MIH algorithm.This scheme has constant lookup cost regardless of the number of machines n.Better yet, we expect it to perform fewer lookups per query as the underlying image collection gets bigger.This is because the more images in each entry of the hash tables,the smaller the search radius r is required to?nd k closeby neighbors.The main challenge with this approach is its large communication cost since there is a huge number of binary codes to be enumerated and checked with even a modest search radius r.

3Design of VertiCut

In this section,we present a fast and scalable multime-dia search engine VertiCut that leverages the features of In?niband to address the distribution challenge.

Basic Design:Figure3shows the system architecture of VertiCut.It contains two layers:search layer and stor-age layer.In search layer,each node starts multiple pro-cesses to deal with the user request in parallel.In storage layer,we run the fast in-memory storage Pilaf[14]on each server which uses the RDMA read interface of In-?niband and organize the memory of the servers into a transparent DHT.

Before VertiCut can answer queries,we?rst build up multiple index hash tables for the whole data collection. We vertically cut the binary codes into multiple disjoint small parts(m parts)with each part consisting no more than32bits and build an index hash table for each part of the codes(just like the MIH algorithm does).Then in-stead of storing different hash tables to different servers, we store these hash tables into our fast distributed in-memory storage(each entry in each hash table generates an entry in our DHT).

When a query binary code Q arrives,the search node divides Q into m parts and starts m processes,with each process searching one index hash table using our simpli-?ed In?niband“get”interface.A master process takes responsibility for performing search iteratively with in-creasing search radius r,controlling each process to do the search in parallel and aggregating the results from all the processes.When there are more than k candidates whose Hamming distance with Q is less than the mini-mum Hamming distance in the next iteration of search radius(r+1),the master process stops the search proce-dure and returns the top k items to the user.

The naive MIH algorithm is not practical due to its huge communication cost which increases explosively with the search radius r.Therefore,we introduce some optimizations to cut down this cost.

Optimization I:Approximate nearest neighbor.In the MIH algorithm,in order to get the exact k nearest re-sults,for each search radius r,we need to check whether there are more than k candidates within a Hamming dis-tance of(r+1)?m after aggregating the results from all the processes.This may cause the search radius r to be-come large in some cases although there have already been nearly k exact nearest neighbors in the candidate set.We notice that the larger the search radius r is,the faster the search cost grows.Since we are not very strict with the exact k nearest search results,we propose an approximate search algorithm to further reduce the syn-chronization cost and search radius r while preserve an acceptable precision at the same time.The optimiza-tion we make is to change the search stop condition to |Candidates|>=Factor Approx?k.This intuitive opti-mization can greatly reduce the search cost con?rmed by our evaluation results.

The trade off is that it may miss some exact search results.For example,suppose we have a full candidate set A(|A|=Factor Approx?k),an item b and the search item Q.All candidates in A have some substring whose Hamming distance with the corresponding substring of Q is zero,while other parts of them are far away from Q.

b has a small Hamming distance(e.g.1)with Q in all of its substrings.Then we miss the item b whose Ham-ming distance to Q is closer than that of candidates in A. Therefore,we should carefully choose the Factor Approx so that we can achieve a proper search precision and query latency.According to our experiment,we?nd that when Factor Approx reaches20,the search precision exceeds80%.However,the query latency does not in-crease signi?cantly,which is still much faster than the exact search.Moreover,with arbitrary k,the average Hamming distance of our approximate search result is always very close to that of the exact search(the error remains less than1).Therefore,we choose20as our default Factor Approx.

Optimization II:Eliminate empty lookups.:We?nd that most of the entries in each index hash table are empty with no items in it.For example,we have one billion items in the whole data set,and the substring length is

32.Then each index hash table has at least232?109

4 empty entries.In fact,according to our experiment,there are almost90%empty entries.Although RDMA reads are much faster than the Ethernet reads,they are still slower than local memory reads.Therefore,we should avoid looking up the empty entries in the distributed hash tables.We create a bitmap for each index hash table which records those empty entries and do the real RDMA get operations only if the binary index is in the hash ta-ble.We can also use a Bloom?lter to avoid reading these empty entries.According to our experiment,using a Bloom?lter is slower than using bitmap by18%while it saves the memory usage by46%.Since the memory us-age of bitmap does not increase with the image size and using bitmap can bring us a7.5x speedup of the search procedure,we use bitmap as our default choice.

4Evaluation

In this section,we evaluate the performance of VertiCut on the image search application compared with the tradi-tional horizontal cutting dispatch and aggregate scheme. Experimental Setup:We set up our experiments on a cluster of12servers.Each server is equipped with two AMD or Intel processors,16or32GB of memory,a Mel-lanox ConnectX-220Gbps In?niband HCA and an Intel gigabit Ethernet adapter.All servers run Ubuntu12.10 with the OFED3.2In?niband driver.

We use the one billion SIFT descriptors from the BIGANN dataset[7]and LSH[6]to map from high-dimensional data to binary codes.The default con?gu-ration of image search application follows that of[15]. The queries are generated by randomly choosing1000 images from the dataset.

We run each test case three times and take the average query latency of1000queries as our primary metric to measure the effectiveness of our system.

Scalability and Query Latency:To demonstrate the scalability of VertiCut,we?rst run a1000nearest neigh-bors search with vary data size.As the data size increases from10million to120million images,we also increases the number of servers from1to12so that each server always processes10million images.We compare three designs,VertiCut on In?niband,the traditional horizontal scheme on In?niband(just use Ethernet over In?niband since the network usage of this scheme is very small)and VertiCut on Ethernet,all of which use the same approxi-mate search algorithm with bitmap optimization.Figure 4shows that as the data size increases,the query latency of the traditional scheme increases rapidly due to the in-creased aggregation cost.Surprisingly,in VertiCut,the latency decreases.The reason why the query latency de-creases in VertiCut is that as the data increase,the num-ber of images in the same entry of a hash table also in-creases.Then searching for the?xed k nearest neighbors, the number of entries we need to enumerate decreases (This can be proved by the decreasing number of reads per query shown on the?gure4).This makes VertiCut more effective and scalable on the huge data set.Note that although the query latency of VertiCut on Ethernet has the same decline trend,it is still8times slower than VertiCut on In?niband and4.4times slower than tradi-tional scheme.

(in terms of bytes sent per query)with the number of servers increases.

Effects of k:To show the low query latency of Verti-Cut on In?niband in arbitrary k nearest neighbors search, we run a test on120million images using12servers with varying k(from1to1000).Figure5shows that VertiCut on In?niband is almost twice as fast as the tra-ditional scheme for arbitrary k although its network cost is about6times larger than that of traditional scheme, while VertiCut on Ethernet is much slower than the other two.This demonstrates that VertiCut on In?niband is the best scheme for the large scale multimedia search engine. Effects of Optimizations:To demonstrate the advan-tages of our two optimizations,we run k nearest neigh-bors search on120million images with and without our optimizations.We vary the k from1to1000,the compar-ison of our VertiCut,MIH(No optimization),MIH with approximate search optimization(Approximate KNN) and MIH with bitmap optimization(Bitmap)is shown in Figure6(Note that the y axis is in log scale).From the result,we can?nd that the approximate optimization improves the search speed by80times,the bitmap opti-mization improves25times,and our VertiCut achieves at least550times improvement.This veri?es that our two optimizations are quite reasonable and effective,which can make the distributed multimedia search much more scalable and practical in reality.

Figure as a function of k(the required number of neighbors return).

Figure of read operations with and without optimizations.

5Related Work

There have been several previous works attempting to provide distributed content-based image retrieval[2,5, 8,10,12,13,15,17–20].They can be divided into two categories:low dimensional and high dimensional ap-proaches.In low dimensional approaches,[5,18]focus on distributing search on peer-to-peer(P2P)networks based on Content-addressable Network(CAN)[16].M-CAN[5]uses a pivot-based method to map images from the metric space to a low dimensional vector space.RT-CAN[18]implements a variational R-tree on top of CAN using low dimensional data(e.g.?ve dimensions). [10,12,20]build the nearest neighbors search on top of distributed computing framework MapReduce[4]for low dimensional datasets(data with no more than30di-mensions).[10]constructs a multi-dimensional index us-ing R-tree.[12]uses a V oronoi diagram-based partition-ing to assign objects.[20]maps data into one dimension using space-?lling curves and transforms KNN joins into one-dimensional range searches.Although these low di-mensional approaches can do fast search in large scale data sets,they cannot achieve precise search results. For high dimensional datasets,there are three ma-jor approaches.The?rst one is Bag-of-features ap-proach[8,13,19],in which each image is represented as a histogram of occurrences of selected features(“vi-sual words”)and search is done by using an Inverted Index structure.Works belonging to this approach all use traditional horizontal cut scheme:each server stores and indexes a part of the dataset.We believe that our VertiCut can also achieve a better scalability for this ap-proach.The second one is distributed KD-tree approach.

[2]gives an implementation on MapReduce in which a master stores the root of the tree,while multiple leaf machines store the rest.When a query comes,the mas-ter forwards the query to a subset of the leaf machines. Unfortunately,this approach has high update cost:each time adding or removing an image,it needs to rebuild the tree.The third one is multiple index hashing ap-proach.[15]provides a distributed scheme for MIH al-gorithm which stores different index hash tables to dif-ferent machines.[17]uses the family of LSH functions based on p-stable distributions to conduct multiple hash tables and distributes them using MapReduce.As ex-plained before,this approach is not practical due to its large communication cost.

6Conclusions

With the rapid growth of multimedia information,multi-media retrieval has become more and more popular in the recent years.How to effectively distribute the search for the increasing huge data collections has become an im-portant challenge with immediate practical implications. In this paper,we present a fast high-dimensional mul-timedia search engine VertiCut based on the high per-formance computing network In?niband to address this challenge.Experiments show that our design can achieve a better scalability and lower response latency,which makes the multimedia retrieval simpler and more prac-tical in reality.

7Acknowledgments

This work is partially supported by the National High Technology Research and Development Program(“863”Program)of China under Grant No.2013AA013203,the National Natural Science Foundation of China Project 61170056and the China Scholarship Council(CSC No. 201306010143).

References

[1]A LEKSANDAR D RAGOJEVI,D.N.,AND C ASTRO,M.FaRM:

Fast remote memory.In USENIX Networked Systems Design and Implementation(NSDI)(2014).

[2]A LY,M.,M UNICH,M.,AND P ERONA,P.Distributed kd-trees

for retrieval from very large image collections.In Proceedings of the British Machine Vision Conference(BMVC)(2011).

[3]D EAN,J.Achieving rapid response times in large online ser-

vices.Berkeley AMP Lab Talk.

[4]D EAN,J.,AND G HEMAWAT,S.Mapreduce:Simpli?ed data

processing on large https://www.sodocs.net/doc/1d8152339.html,mun.ACM51,1(Jan2008), 107–113.

[5]F ALCHI,F.,G ENNARO,C.,AND Z EZULA,P.A contentaddress-

able network for similarity search in metric spaces.In Proceed-ings of conference on Databases,Information Systems,and Peer-to-Peer Computing(DBISP2P)(2007).

[6]I NDYK,P.,AND M OTWANI,R.Approximate nearest neighbors:

Towards removing the curse of dimensionality.In Proceedings of the30th Annual ACM Symposium on Theory of Computing (STOC)(1998).

[7]J EGOU,H.,T AVENARD,R.,D OUZE,M.,AND A MSALEG,L.

Searching in one billion vectors:Re-rank with source coding.In IEEE International Conference on Acoustics,Speech and Signal Processing(ICASSP)(2011).

[8]J I,R.,D UAN,L.-Y.,C HEN,J.,X IE,L.,Y AO,H.,AND G AO,

W.Learning to distribute vocabulary indexing for scalable visual search.IEEE Transactions on Multimedia15,1(Jan2013),153–166.

[9]L I,J.,L OO, B.T.,H ELLERSTEIN,J.,K AASHOEK, F.,

K ARGER,D.,AND M ORRIS,R.On the feasibility of peer-to-peer web indexing and search.In Proceedings of the2nd Inter-national Workshop on Peer-to-Peer Systems(IPTPS)(Feb.2003).

[10]L IAO,H.,H AN,J.,AND F ANG,J.Multi-dimensional index on

hadoop distributed?le system.In Proceedings of IEEE Fifth In-ternational Conference on Networking,Architecture and Storage (NAS)(2010).

[11]L OWE,D.Object recognition from local scale-invariant features.

In Proceedings of the Seventh IEEE International Conference on Computer Vision(1999),vol.2,pp.1150–1157.

[12]L U,W.,S HEN,Y.,C HEN,S.,AND O OI,B.C.Ef?cient pro-

cessing of k nearest neighbor joins using mapreduce.Proc.VLDB Endow.5,10(Jun2012),1016–1027.

[13]M AR′E E,R.,D ENIS,P.,W EHENKEL,L.,AND G EURTS,P.In-

cremental indexing and distributed image search using shared randomized vocabularies.In Proceedings of the International Conference on Multimedia Information Retrieval(MIR)(2010).

[14]M ITCHELL,C.,G ENG,Y.,AND L I,https://www.sodocs.net/doc/1d8152339.html,ing one-sided rdma

reads to build a fast,cpu-ef?cient key-value store.In Proceed-ings of the USENIX Conference on Annual Technical Conference (ATC)(2013).

[15]N OROUZI,M.,P UNJANI,A.,AND F LEET,D.Fast search in

hamming space with multi-index hashing.In IEEE Conference on Computer Vision and Pattern Recognition(CVPR)(2012). [16]R ATNASAMY,S.,F RANCIS,P.,H ANDLEY,M.,K ARP,R.,AND

S HENKER,S.A scalable content-addressable network.In Pro-ceedings of conference on Applications,Technologies,Architec-tures,and Protocols for Computer Communications(SIGCOMM (2001).

[17]S TUPAR,A.,S TUPAR,A.,AND S CHENKEL,R.Rankreduce

processing k-nearest neighbor queries on top of mapreduce.In Proceedings of Workshop on Large-Scale Distributed Systems for Information Retrieval(LSDS-IR)(2010).[18]W ANG,J.,W U,S.,G AO,H.,L I,J.,AND O OI,B.C.Index-

ing multi-dimensional data in a cloud system.In Proceedings of the ACM SIGMOD International Conference on Management of Data(2010).

[19]Y AN,T.,G ANESAN,D.,AND M ANMATHA,R.Distributed im-

age search in camera sensor networks.In Proceedings of the6th ACM Conference on Embedded Network Sensor Systems(SenSys) (2008).

[20]Z HANG,C.,L I,F.,AND J ESTES,J.Ef?cient parallel knn joins

for large data in mapreduce.In Proceedings of the15th Inter-national Conference on Extending Database Technology(EDBT) (2012).